China: Woman in Ad Banner Mistaken for Criminal by Face Recognition System

Harin - Nov 26, 2018

A woman in China was mistakenly flagged as a criminal by a face recognition system when her photo appeared on an advertisement banner on a bus.


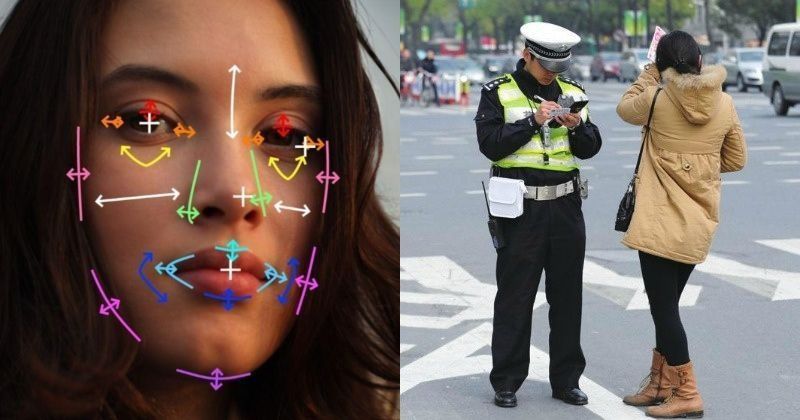

Ever since China introduced its new facial recognition and AI surveillance systems, technology experts and human rights activists worldwide have been voicing their criticism.

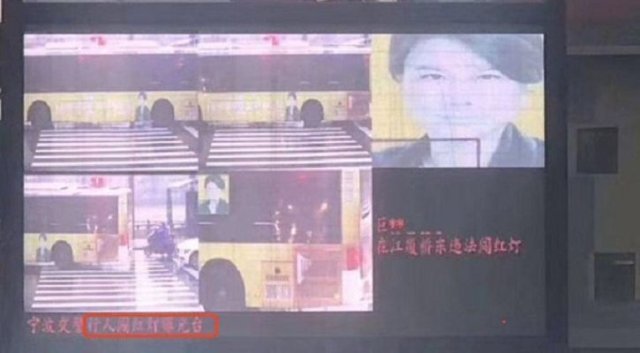

Recently, an embarrassing incident caused by the system seemed to justify those criticisms. The police force in the city of Ningbo had to take action after a woman was mistakenly identified as a jaywalker by a road surveillance camera. The automated system flagged the businesswoman for crossing the road before the green pedestrian light had come on. The problem was not only that the system made a mistake: the woman in question was nowhere near the area.

Cameras have long been installed at major intersections and on roadways across the country. They were later upgraded with a facial recognition feature. These cameras can detect and capture human faces from a distance, and the captured images are then compared against government records.

Authorities now also use this feature at crosswalks to publicly shame pedestrians who cross the road illegally. The AI pinpoints the pedestrian’s identity, and by the time they finish crossing, their name, photo and government ID are displayed on a digital notice board for other people to see.

The system went wrong, however, in the case of Dong Mingzhu. When the camera captured her image and put it on the notice board, she wasn’t actually there crossing the road. Because she is quite a famous person in the area, her photo was on an advertisement on a bus passing the crosswalk. The AI mistook the image for the real Dong Mingzhu and automatically flagged her. The local police later had to correct the error. An upgrade has reportedly been made to the facial recognition system in order to “lower the incorrect recognition rate”. However, how soon this can be achieved remains unknown.

It might sound funny when you first read about it, but there are real problems here. When AI is used to automate law enforcement, a single false identification can seriously affect the victim. Not to mention the underlying problem that non-stop government surveillance can intrude on people’s privacy.
