Google Brain Built A Translator For AI To Explain Itself

Harin - Jan 17, 2019

A Google Brain scientist built a tool which can help AI (artificial intelligence) systems explain how they come to their conclusions.

A Google Brain scientist has built a tool that helps AI (artificial intelligence) systems explain how they reach their conclusions, a task that has long been considered tricky, especially for machine learning algorithms.

The tool is called TCAV, short for Testing with Concept Activation Vectors. It can be plugged into machine learning algorithms to show how much weight they gave to different types of data and factors before delivering a result.
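To make the idea more concrete, here is a minimal sketch of a TCAV-style computation in Python with NumPy. It is not Google's implementation. It assumes we already have a layer's activations for examples of a concept and for random examples, plus the gradient of a class score with respect to that layer. A least-squares linear classifier stands in for whatever classifier the real tool trains, and the function names `compute_cav` and `tcav_score`, the toy linear model, and all the data are hypothetical and synthetic.

```python
import numpy as np


def compute_cav(concept_acts, random_acts):
    """Fit a least-squares linear classifier (concept = +1, random = -1)
    and return its unit-length normal vector as the concept direction."""
    X = np.vstack([concept_acts, random_acts])
    X = np.hstack([X, np.ones((X.shape[0], 1))])  # append a bias column
    y = np.concatenate([np.ones(len(concept_acts)),
                        -np.ones(len(random_acts))])
    w, *_ = np.linalg.lstsq(X, y, rcond=None)
    direction = w[:-1]  # drop the bias term
    return direction / np.linalg.norm(direction)


def tcav_score(class_score_grads, cav):
    """Fraction of inputs whose class score increases when the layer
    activations are nudged along the concept direction."""
    directional_derivs = class_score_grads @ cav
    return float(np.mean(directional_derivs > 0))


# Synthetic stand-in data (assumption): the concept shifts activations
# along the first axis of an 8-dimensional layer.
rng = np.random.default_rng(0)
dim = 8
concept_axis = np.zeros(dim)
concept_axis[0] = 1.0
random_acts = rng.normal(size=(200, dim))
concept_acts = rng.normal(size=(200, dim)) + 3.0 * concept_axis

cav = compute_cav(concept_acts, random_acts)

# Toy linear "model head" (assumption): class score = head_w @ activation,
# so the gradient with respect to the activations is head_w for every input.
head_w = concept_axis + 0.1 * rng.normal(size=dim)
grads = np.tile(head_w, (50, 1))

score = tcav_score(grads, cav)
print(f"TCAV-style score for the toy class: {score:.2f}")
```

A score close to 1 means the concept direction pushes the class score up for most inputs, while a score close to 0 means it pushes the score down. In practice the gradients would come from a real network and would differ from one input to the next.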

Tools like TCAV are in great demand because AI is under scrutiny over gender and racial bias.

With TCAV, people using a facial recognition algorithm would be able to determine how much it factored in race when evaluating job applications or matching people against a database of criminals. Instead of blindly trusting that the results a machine delivers are fair and objective, people can question, reject and even fix those conclusions.

Been Kim, a Google Brain scientist, told Quanta that she does not need a tool that fully explains an AI's decision-making process. It is good enough to have something that can flag potential issues and help humans understand what may have gone wrong and where.

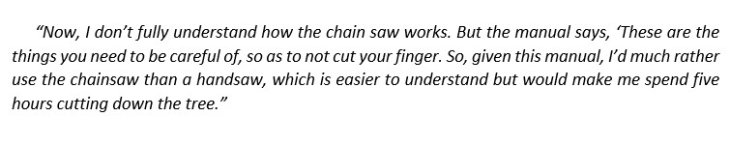

Kim then compared the idea to reading the safety labels before using a chainsaw to cut down a tree.

She said:
