Amazon's Facial Recognition Tech Matched Politicians To Criminals

Viswamitra Jayavant - Sep 11, 2019

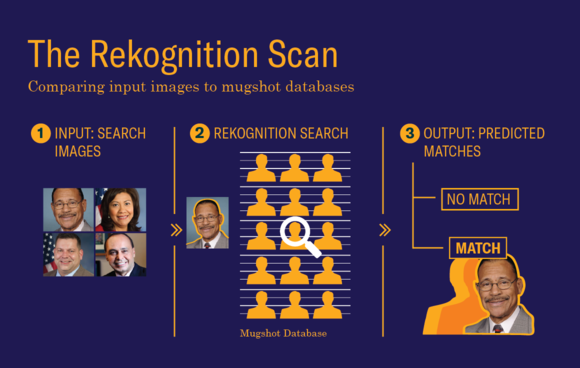

Amazon's Rekognition is a problematic piece of software: in a recent test, it misidentified 26 Californian politicians as criminals.

Facial recognition is the latest technology the government is enthusiastic about, and it has been pushing for wide deployment for a while. Even if you don’t know the technical or legal details behind the move, it’s not hard to see why this may be a bad thing.

The ACLU ran a test to see how effective the facial recognition software used by U.S. law enforcement agencies, Amazon’s Rekognition, really is. The results could hardly be more depressing (or more morbidly humorous): it falsely identified 26 Californian lawmakers as criminals.

In a sense, the system’s not wrong - but we’ll leave the political jokes alone for now.

ACLU's Experiments

It’s not the first time the ACLU has run this type of experiment. The first test, done last year, showed Rekognition’s accuracy to be disastrous. Not only were the results incorrect, they were also racially biased when the program was asked to match members of Congress.

In the second, more recent test, the ACLU assembled a data set of 120 Californian politicians and pitted them against a database of 12,000 mugshots of criminals. False positives popped up about 20% of the time.
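As a quick check on that figure, the rate can be worked out from the counts reported in this article: 26 false matches among 120 politicians. The helper name `false_match_rate` below is hypothetical and exists only for illustration.

```python
def false_match_rate(false_matches: int, people_tested: int) -> float:
    """Share of tested people who were wrongly matched to a mugshot."""
    if people_tested <= 0:
        raise ValueError("people_tested must be positive")
    return false_matches / people_tested


# Counts taken from the article: 26 mismatched out of 120 politicians.
rate = false_match_rate(26, 120)
print(f"{rate:.1%}")  # prints 21.7%
```

That works out to a little over one in five, in line with the roughly 20% figure.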

Phil Ting, a San Francisco Assembly Member, is one of the politicians who were mismatched. He is now using the result to push for a ban on facial recognition tech in police body cameras. At a press conference on the matter, he said the experiment proved that facial recognition tech is still not ready for use. He also noted that its inaccuracy could cause problems for people who are interviewing for a job or trying to buy a home.

Amazon's Response

In response to the experiment, an Amazon spokesperson defended the program, telling the press that the ACLU had “[misused] and [misrepresented]” Rekognition in order to “make headlines.” The company said that when the program is used with a 99% confidence threshold and combined with human-driven decisions, the tech could bring a vast array of benefits.

Still A Lot of Questions

Considerable confusion has surrounded Rekognition. Amazon says the program should be used with a 99% confidence threshold. However, Matt Cagle, an ACLU attorney who collaborated with UC Berkeley to verify the results, said the organization did not use a 99% threshold simply because that is not the program’s default setting, which is 80%.

Amazon even pointed to a blog post stating explicitly that Rekognition should not be used with any confidence threshold other than 99%. Yet, strangely, that is not the program’s default value.
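To see why the threshold matters so much, here is a minimal sketch of how a confidence cutoff filters candidate matches. It does not call Rekognition itself. The `Match` type, the `filter_matches` helper, and the scores are all made up for illustration, and only the 80% and 99% values come from the article.

```python
from typing import NamedTuple


class Match(NamedTuple):
    name: str          # hypothetical label for a mugshot in the database
    similarity: float  # similarity score in percent, 0 to 100


def filter_matches(candidates: list[Match], threshold: float) -> list[Match]:
    """Keep only candidates whose similarity meets the threshold."""
    return [m for m in candidates if m.similarity >= threshold]


# Made-up scores for illustration only; not data from the ACLU test.
candidates = [
    Match("mugshot_a", 81.5),
    Match("mugshot_b", 92.0),
    Match("mugshot_c", 99.3),
]

DEFAULT_THRESHOLD = 80.0      # default setting reported in the article
RECOMMENDED_THRESHOLD = 99.0  # value Amazon says should be used

print(len(filter_matches(candidates, DEFAULT_THRESHOLD)))      # prints 3
print(len(filter_matches(candidates, RECOMMENDED_THRESHOLD)))  # prints 1
```

With these invented scores, the default cutoff reports three "matches" while the stricter one reports a single match, which is the gap at the heart of the dispute between Amazon and the ACLU.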
