AI is Changing What We Consider to be Photography

Viswamitra Jayavant - Mar 01, 2019

AI is changing photography for the better. By making software one of the factors that create a good picture, smartphones can outgrow the physical limits of their own hardware.

In the last couple of years, we have witnessed dramatic shifts in the smartphone market. In terms of both design and price, smartphones have evolved into something that would have been beyond anyone’s wildest dreams just a decade ago. With each new generation, the components inside become faster, more compact, and more efficient than before. One component that has received particular attention from smartphone makers is the camera module.

The End of an Era

But the age of squeezing in ever more compact image sensors without losing fidelity, or of putting as many lenses as possible onto the phone, has ended. Instead, manufacturers are quite certain that the ‘next big thing’ in smartphone photography is Artificial Intelligence (AI). Evidently, the most recent improvements in smartphone camera technology have occurred at the software level rather than in the hardware, that is, the lenses and sensors.

Put simply, flagship handsets deliver excellent picture quality because the integrated AI better understands what it is looking at, rather than because of how the image sensor captures the moment or how the phone processes the picture.

How It All Started

If you want to see for yourself how powerful the combination of AI and photography is, look no further than Google Photos, which should be available natively to anyone who uses an Android phone.

Launched in 2015, Google Photos was the result of many years of hard work by engineers at Google in their race to develop a consumer-friendly AI product. Because the programme ‘learned’ how to categorize images using Google+ photos, Google Photos can arrange all of your images into a neat, searchable database.

In short, Google Photos has the ability to know what your house, your pet, and even you look like.

Google Photos was built upon the knowledge framework of DNNresearch, a technology firm acquired by Google in 2013. The AI nestled within Google Photos is a deep neural network that has been trained on millions of images already labeled by humans. Over time, the AI learns to recognize visual cues at the pixel level, giving it the ability to ‘recognize’ what different objects look like. The more data the programme is exposed to, the better it becomes at recognizing images.

For example, by being exposed to images of pandas, the programme can learn what a panda is from the colour of its fur, black and white, and from where the black and white patches usually sit in relation to one another. In time, the AI will understand pandas well enough to distinguish between a panda and a Holstein cow, despite the glaring similarity in the colours of their fur and hide.

That sounds like a lot of work. And yes, it is a lot of work, far too much for a typical mobile phone to handle. That’s why most of the processing has already been done at Google’s massive data centers. Once your images are uploaded, Google only needs to match the photos against pre-trained models to give you a nearly instantaneous result.
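To make the split between heavy training and cheap matching concrete, here is a toy sketch. It is not how Google Photos works internally; it swaps the deep neural network for a much simpler nearest-centroid classifier, and the two features per image (share of dark pixels, roundness of the dark patches) are hypothetical numbers invented for illustration.

```python
def train_centroids(labeled_examples):
    """'Training': average the feature vectors of each label (the heavy step, done once)."""
    sums, counts = {}, {}
    for label, features in labeled_examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(model, features):
    """'Matching': pick the label whose centroid is closest (the cheap step)."""
    return min(model, key=lambda label: squared_distance(model[label], features))


# Hypothetical, hand-made features: (share of dark pixels, roundness of dark patches)
TRAINING_SET = [
    ("panda", (0.40, 0.90)),
    ("panda", (0.35, 0.80)),
    ("cow", (0.50, 0.30)),
    ("cow", (0.60, 0.20)),
]

model = train_centroids(TRAINING_SET)
print(classify(model, (0.38, 0.85)))
```

The point of the sketch is the division of labour: `train_centroids` needs the whole labeled data set, while `classify` only compares one new photo against a handful of stored numbers.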

Change of Hardware

Google wasn’t the only one interested in this technology, however. About a year after the debut of Google Photos and its revolutionary feature, Apple released its own take on the idea as a photo search function. Apple’s adaptation was based on a similar concept of machine learning, but the key difference between the two is that Apple’s version took the longer route.

Due to the company’s privacy commitment, your images are not sent to the company’s servers (which would expose you to privacy risks). Instead, photos are cataloged by your own device, and nowhere else. Granted, for an average-sized album, it would take a day or two for the AI to finish arranging everything. But for those who are concerned about privacy, it’s not that significant a trade-off in comparison to Google’s offering.

But we’ve digressed from the point. You’ve already seen how AI has quietly lived inside your smartphone for the last few years. Yet AI’s ability to intelligently sort your images by category and subject isn’t really that impactful once you consider how AI has changed the way pictures are captured in the first place.

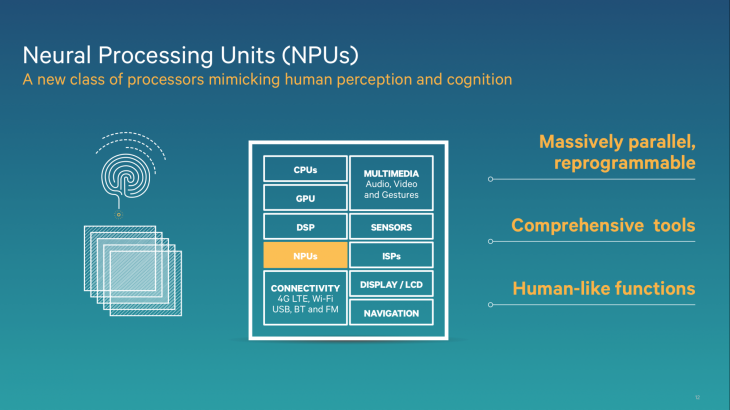

Hardware is reaching its limit. We’re already hitting the ceiling of physics by cramming smaller and more efficient sensors and lenses into gradually slimmer phones each year. Nonetheless, image quality improves with each new release of smart devices. Engineers have outdone themselves to such an extent that, under the right conditions, smartphones can sometimes even take better pictures than professional photography gear. What sets the smartphone’s camera apart from traditional cameras lies right at the heart of the smartphone. Aside from containing critical components such as the CPU and GPU necessary for the smartphone’s normal operation, the SoC (System-on-Chip) now, following recent technological trends, also includes a neural processing unit (NPU).

Computational Photography

The NPU is where the magic of modern smartphone photography happens, allowing smartphones to practice what is called ‘Computational Photography’. Through different algorithms, the technique has paved the way for everything from the iPhone’s portrait mode to the excellent image enhancement in the Google Pixel.

It is the reason why iPhones can generate a faux depth-of-field effect on both single-lens (Xr) and dual-lens (Xs, Xs Max) devices. Machine learning helps the iPhone’s camera module recognise people. The phone generates a depth map in real time and applies it to the subject, allowing the subject to be isolated and a blur effect to be applied to the background.
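The isolate-then-blur idea can be sketched in a few lines. This is only an illustration under loud assumptions, not Apple’s implementation: the image is a small grayscale grid, the depth map is supplied by hand instead of being estimated by machine learning, and the blur is a plain box blur. The names `box_blur_at`, `fake_portrait` and `max_subject_depth` are made up for this example.

```python
def box_blur_at(image, row, col, radius=1):
    """Average the pixels in a square window around (row, col), clipped at the edges."""
    height, width = len(image), len(image[0])
    total, count = 0, 0
    for r in range(max(0, row - radius), min(height, row + radius + 1)):
        for c in range(max(0, col - radius), min(width, col + radius + 1)):
            total += image[r][c]
            count += 1
    return total / count


def fake_portrait(image, depth_map, max_subject_depth):
    """Keep near pixels (the subject) sharp and blur everything farther away."""
    output = []
    for r, row in enumerate(image):
        new_row = []
        for c, pixel in enumerate(row):
            if depth_map[r][c] <= max_subject_depth:
                new_row.append(pixel)  # subject: left untouched
            else:
                new_row.append(box_blur_at(image, r, c))  # background: blurred
        output.append(new_row)
    return output


# Hand-made 3x3 example: a bright subject in the centre, close to the camera.
image = [[0, 90, 0], [90, 200, 90], [0, 90, 0]]
depth_map = [[5, 5, 5], [5, 1, 5], [5, 5, 5]]

result = fake_portrait(image, depth_map, max_subject_depth=2)
print(result[1][1], result[0][0])
```

A real phone has to do this for millions of pixels in every preview frame, which is where dedicated hardware earns its place.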

If you’ve dabbled in Photoshop or any image manipulation tool, you will know that this isn’t something new. However, the iPhone’s ability to do all of this in real time, and on a system as compact as a smartphone, is the revolutionary aspect.

Google seems to be leading the pack in terms of AI-assisted photography. With the HDR+ shooting mode, an algorithm is applied to the image to enhance its quality far beyond what the image sensor could produce on its own.

Remember machine learning?

Google Pixel devices can learn from the photographs they have taken. Thus, you can seriously expect the image quality to get better the more photos you shoot. Many professionals have been so impressed by the quality of the photography suite that they have turned to the Pixel as their primary photography device.

That’s not the only magic that AI-assisted photography can do. Google has just introduced Night Sight to the Pixel. Night Sight merges several long-exposure shots together and uses algorithms to apply proper white balance and colours to the details. The result is stunning: low-light photos appear just as bright and vibrant as photos taken in optimal conditions.
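Why merging several shots helps can be shown with a rough simulation. This is a simplified sketch and not Google’s actual pipeline: it assumes the frames are already perfectly aligned, models sensor noise as random Gaussian values, and merges by plain averaging. The helpers `capture_frame`, `merge_frames` and `mean_abs_error` are hypothetical names for this example.

```python
import random


def capture_frame(scene, noise_sigma, rng):
    """Simulate one noisy low-light shot of the same scene."""
    return [pixel + rng.gauss(0, noise_sigma) for pixel in scene]


def merge_frames(frames):
    """Average the frames pixel by pixel (assumes they are already aligned)."""
    return [sum(values) / len(frames) for values in zip(*frames)]


def mean_abs_error(frame, scene):
    """How far, on average, a frame's pixels are from the true scene."""
    return sum(abs(a - b) for a, b in zip(frame, scene)) / len(scene)


rng = random.Random(42)           # fixed seed so the run is repeatable
scene = [20.0] * 256              # a dim, flat scene of 256 pixels
frames = [capture_frame(scene, 10.0, rng) for _ in range(16)]
merged = merge_frames(frames)

print("single frame error:", mean_abs_error(frames[0], scene))
print("merged frame error:", mean_abs_error(merged, scene))
```

Because the noise is random while the scene is not, averaging cancels much of the noise and leaves the scene intact, so the merged frame lands much closer to the truth than any single shot.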

The new Pixel 3 makes the best use of this feature thanks to its up-to-date hardware, though all generations of Pixel phones can use Night Sight. It’s a testament to how far software-based photography has evolved beyond the traditional art of hardware.

Do Not Discount Hardware

Still, we can’t really discount the hardware’s role, either. A synergy of hardware and software is how we’re going to reach even more brilliant results from now on.

You can see this in Huawei’s new flagship Nova 4 and Honor’s View 20.

Both use a top-of-the-line 48MP IMX586 image sensor from Sony as the heart of their camera modules. The sensor can produce pictures at a never-before-seen resolution that outstrips every competitor on the market. Still, compressing that many pixels into the cramped space of an average picture can be disastrous. This is where what Honor calls ‘AI Ultra Clarity’ mode steps in: deep neural networks once again save the day by not only optimizing the resolution of the image but also reconfiguring the colour filter to reveal extra details in the image.

Ever since a camera was first placed on a phone, the image signal processor has been the primary component that decides the final quality of the captured image. Now, it seems, the NPU is an addition that will certainly be commonplace in the smartphones of the future. It’s entirely possible that the NPU’s role in the photographic performance of the device will become more and more substantial as computational photography advances. Huawei was the first to announce a move to place a dedicated AI processor on its SoC, beginning with the Kirin 970. Despite this, Apple beat it to the title by getting the A11 Bionic onto the market first. Manufacturers are paying more and more attention to AI, with the latest move being Google’s development of its in-house chip, the Pixel Visual Core. As I said, NPUs will eventually become common enough to exist in virtually every smartphone manufacturer’s portfolio.

Not only NPUs but the entire system will need to advance in order to facilitate computational photography, which is well known for its demand for extremely high processing power. After all, to accomplish such a feat, Google Photos was trained on Google’s massive computer farms, whose processing power is orders of magnitude higher than that of the average smartphone. This is why, though it may sound simple, real-time machine learning computation is still only available on cutting-edge, luxury phones.

The Promising Future

Neural engines have improved significantly thanks to the efforts of engineers. Many techniques have also been studied to reduce the processing workload on smartphone systems and speed up the introduction of computational photography to the wider public.

AI has been one of the chief technological topics of the past few years. But its most practical application can be found right there in the palm-sized device in your pocket. The camera has become an inseparable part of the smartphone, and it is through AI, no longer the hardware itself, that it will improve.
