A Brief Explanation Of How Deep Fusion Works On Photos

Aadhya Khatri - Jan 22, 2020



Deep Fusion stirred curiosity even before it was released in iOS 13.2. Now that users have had some time to familiarize themselves with the technology, let's look at how it works.

Deep Fusion Is Not So Complicated

Like Apple's Smart HDR, Deep Fusion relies on scene and object recognition, as well as the eight pictures taken right before the user captures the scene.

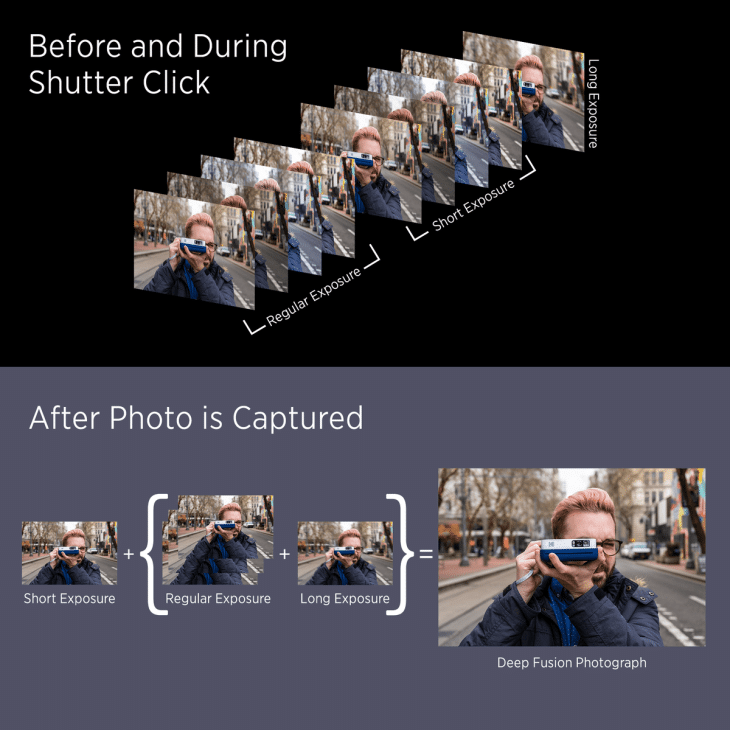

Now let's talk about those eight images. Four of them are taken with a short exposure and the other four with a standard exposure. When the user presses the shutter button, a ninth picture is captured with a long exposure.
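To make the frame bookkeeping concrete, here is a toy model of a rolling pre-shutter buffer. It is a minimal sketch, not Apple's code: the class name `PreShutterBuffer`, the `Frame` type, the exposure labels, and the assumption that short and standard exposures simply alternate are all hypothetical. The only details taken from the article are the eight buffered frames (four short, four standard) and the ninth long exposure captured on shutter press.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class Frame:
    exposure: str  # hypothetical labels: "short", "standard" or "long"
    index: int     # capture order, for illustration only


class PreShutterBuffer:
    """Toy model of the eight-frame buffer described in the article."""

    def __init__(self, size: int = 8) -> None:
        # A deque with maxlen silently drops the oldest frame once full,
        # so only the most recent `size` frames are kept.
        self.frames: deque[Frame] = deque(maxlen=size)
        self.counter = 0

    def capture_preview(self) -> None:
        # Assumption: short and standard exposures alternate while the
        # camera app is open, giving four of each in an eight-frame buffer.
        exposure = "short" if self.counter % 2 == 0 else "standard"
        self.frames.append(Frame(exposure, self.counter))
        self.counter += 1

    def press_shutter(self) -> list[Frame]:
        # The ninth frame is a long exposure taken when the shutter is pressed.
        long_frame = Frame("long", self.counter)
        self.counter += 1
        return list(self.frames) + [long_frame]
```

After any number of preview frames, `press_shutter()` returns exactly nine frames: the latest eight buffered ones plus the long exposure. The fixed-size deque is what keeps the buffer from growing while the viewfinder is open.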

Because a short exposure freezes motion and preserves high-frequency detail, the sharpest of the four short-exposure frames is picked for the next step.
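Apple has not said how it measures "sharpest". One common proxy is the variance of a Laplacian filter: frames with more high-frequency detail produce larger responses. The sketch below assumes that metric purely for illustration and represents grayscale images as plain nested lists of floats; the function names are hypothetical.

```python
from __future__ import annotations


def laplacian_variance(img: list[list[float]]) -> float:
    """Variance of the 4-neighbour Laplacian over interior pixels.

    Higher values mean more high-frequency detail. Requires an image of
    at least 3x3 pixels.
    """
    h, w = len(img), len(img[0])
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Discrete Laplacian: sum of the four neighbours minus 4x centre.
            lap = (img[y - 1][x] + img[y + 1][x]
                   + img[y][x - 1] + img[y][x + 1]
                   - 4 * img[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def pick_sharpest(frames: list[list[list[float]]]) -> list[list[float]]:
    # Keep the short-exposure frame with the most high-frequency detail.
    return max(frames, key=laplacian_variance)
```

A perfectly flat frame scores zero, while a frame with fine texture (for example, a checkerboard pattern) scores high, so `pick_sharpest` would choose the textured one.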

Three of the standard-exposure frames usually yield the best tones, colors, and other low-frequency data. They are then fused with the long-exposure frame to make a single image that combines the best features of each.
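The article does not describe how the standard and long exposures are combined. As a stand-in, the sketch below uses a plain per-pixel weighted average; the function name `fuse_exposures` and the `long_weight` parameter are hypothetical, and Apple's actual fusion is almost certainly more sophisticated. It only illustrates the idea of merging several low-noise frames into one.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values


def fuse_exposures(standard: List[Image], long_exposure: Image,
                   long_weight: float = 0.25) -> Image:
    """Blend the standard frames with the long exposure, pixel by pixel.

    The long exposure gets `long_weight`; the remaining weight is split
    evenly across the standard frames, so all weights sum to 1.
    """
    if not standard:
        raise ValueError("need at least one standard frame")
    h, w = len(long_exposure), len(long_exposure[0])
    std_weight = (1.0 - long_weight) / len(standard)
    fused = []
    for y in range(h):
        row = []
        for x in range(w):
            value = long_weight * long_exposure[y][x]
            value += sum(std_weight * frame[y][x] for frame in standard)
            row.append(value)
        fused.append(row)
    return fused
```

Averaging several frames of the same scene tends to cancel random noise while keeping tone and color, which is why the standard frames are a natural source of low-frequency information.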

This fused image and the sharpest short-exposure shot are then sent to a neural network, which picks out the best pixels and presents the final image to the user. This approach can help reduce noise, keep colors accurate, and sharpen details.
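Apple's neural network is not public, so the following is only a hand-written heuristic standing in for it, to show what "picking the best pixel" between two inputs might mean. Assumptions: where the sharp short-exposure frame shows strong local detail (its pixel differs from the average of its four neighbours by more than a hypothetical `threshold`), take that pixel; otherwise keep the smoother fused value. Border pixels always come from the fused image.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values


def merge_detail(sharp: Image, fused: Image, threshold: float = 0.1) -> Image:
    """Heuristic stand-in for the per-pixel choice made by the neural network.

    Keeps the short-exposure pixel where it carries local detail and the
    fused pixel elsewhere. Both images must have the same dimensions.
    """
    h, w = len(fused), len(fused[0])
    out = [row[:] for row in fused]  # start from a copy of the fused image
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            neighbours = (sharp[y - 1][x] + sharp[y + 1][x]
                          + sharp[y][x - 1] + sharp[y][x + 1]) / 4
            # Large deviation from the neighbourhood means fine detail here.
            if abs(sharp[y][x] - neighbours) > threshold:
                out[y][x] = sharp[y][x]
    return out
```

A learned model can weigh far more context than a fixed threshold, but the structure is the same: detail from the sharp frame, tone and low noise from the fused frame.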

The processing happens behind the scenes, so it has minimal impact on capture time.

According to Apple, you may sometimes see a picture being processed for about a second. Deep Fusion is not available in burst mode, and only the iPhone 11 and 11 Pro models support it.

It Just Works

Users cannot turn the feature on or off: Deep Fusion works automatically whenever possible.

It does not work with Apple's ultra-wide lens. On the main lens, the company said the feature kicks in under indoor lighting, as long as the phone does not automatically switch to Night mode.

With the telephoto lens, Deep Fusion is available when shooting subjects that are neither too dark nor too bright. Very dark scenes usually call for the main sensor rather than the telephoto camera.
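The availability rules from this section and the burst-mode note above can be summarized as a small decision function. This is a sketch of the rules as the article states them, not Apple's logic: the function name, the lens names, and the light labels (`"very_dark"`, `"indoor"`, `"very_bright"`) are hypothetical, and any condition the article does not mention (such as the main lens in bright daylight) simply returns `False` here.

```python
def deep_fusion_applies(lens: str, light: str, night_mode: bool = False,
                        burst: bool = False) -> bool:
    """Return True if, per the article's description, Deep Fusion would run.

    lens:  "ultrawide", "wide" (main) or "telephoto"   (hypothetical labels)
    light: "very_dark", "indoor" or "very_bright"      (hypothetical labels)
    """
    # Never used in burst mode or on the ultra-wide lens.
    if burst or lens == "ultrawide":
        return False
    # Main lens: indoor lighting, unless Night mode has taken over.
    if lens == "wide":
        return light == "indoor" and not night_mode
    # Telephoto: anything that is neither too dark nor too bright.
    if lens == "telephoto":
        return light not in ("very_dark", "very_bright")
    raise ValueError(f"unknown lens: {lens!r}")
```

For example, `deep_fusion_applies("wide", "indoor")` is `True`, while the same call with `night_mode=True` is `False`.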

The Results

Many users have reported that the effect of Deep Fusion on the iPhone 11 lineup is subtle. In one test comparing an iPhone 11 running iOS 13.2 against another running iOS 13.1 without Deep Fusion, the difference was hard to notice.

However, when zooming in, users may notice finer detail. It is not something that will impress at first glance, especially when viewing the photo on the phone itself. What we appreciate most is that it can only improve an image, not make it worse.
