Nvidia GTC 2020 Update RTX And A100 GPU Keynotes

Dhir Acharya - May 16, 2020

Nvidia GTC 2020 update: keynote highlights and details on the new A100 GPU, which promises 20 times the performance of its predecessor.


New CPUs and GPUs are usually touted as offering two or three times the speed of current versions. With its newest GPU, however, Nvidia has promised 20 times better performance than its predecessor, a big boost for the cloud computing firms that will use the chips in their data centers. The tech giant unveiled the A100 GPU earlier this week as part of its GTC 2020 keynote.

Paresh Kharya, director of product management for data center and cloud platforms at Nvidia, said during a Wednesday briefing with reporters:

“The Ampere architecture provides the greatest generational leap out of our eight generations of GPUs.”

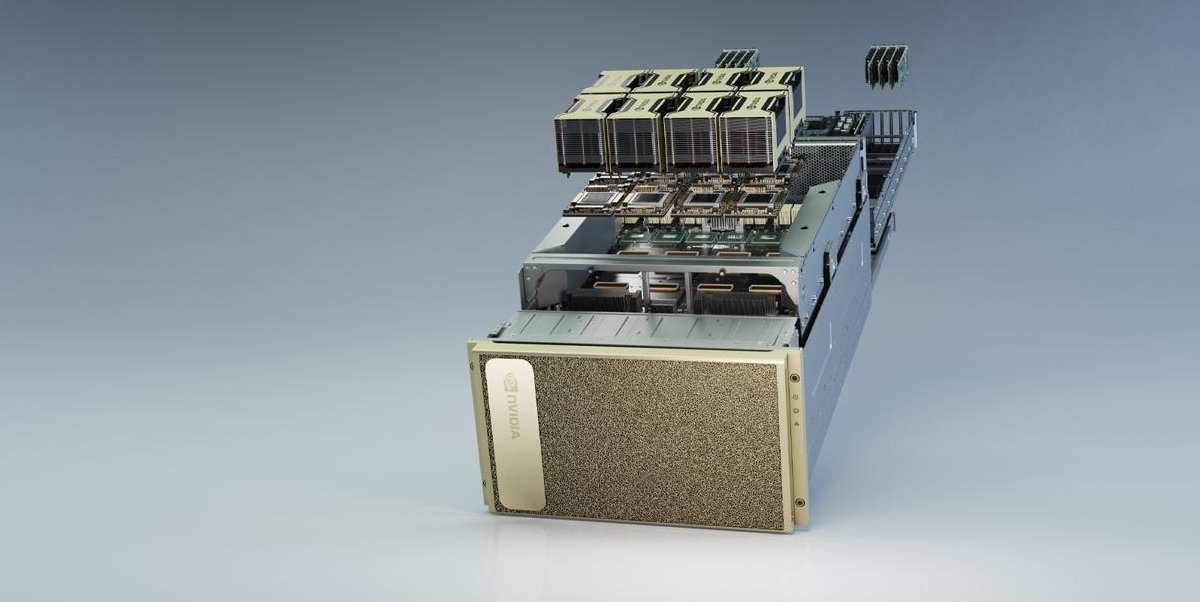

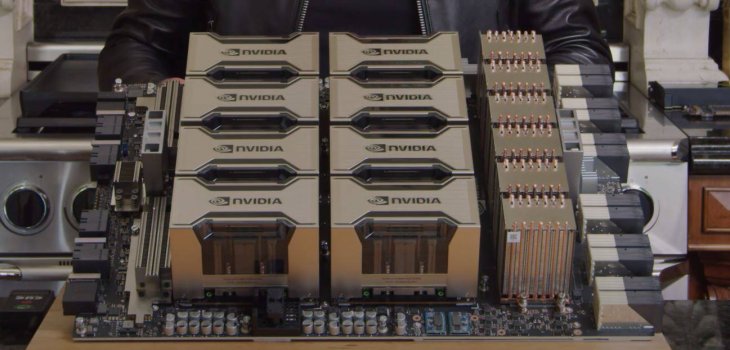

At the Thursday event, Nvidia revealed that the DGX A100 board combines 8 A100s into a super-GPU that can work as one giant processor or as separate GPUs for different tasks or users. It weighs over 22 kilograms and, as the earlier teaser video showed, fits in an oven.

The A100 is designed to handle intensive tasks such as conversational AI, AI training, high-performance data analytics, scientific simulation, genomics, seismic modeling, and financial forecasting. In particular, the A100 will be used in the search for vaccines and cures for COVID-19, helping researchers crunch data in days instead of years.

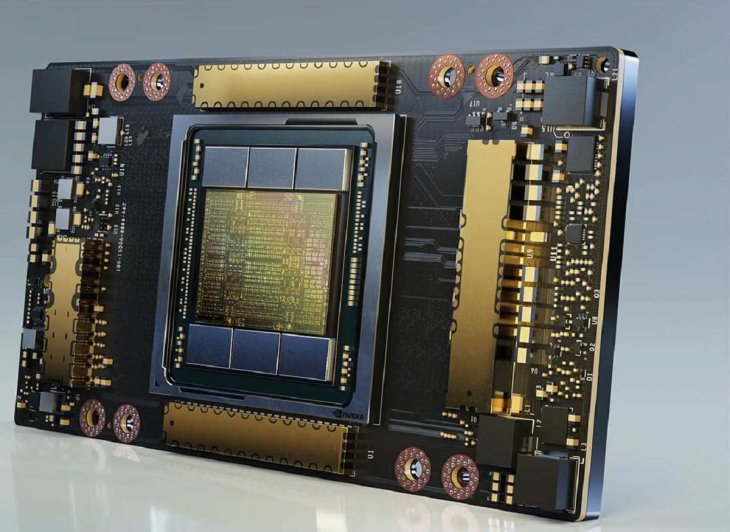

The A100 is built on a 7nm process. Shrinking transistors is one of the most important parts of semiconductor manufacturing, because smaller transistors improve a chip's performance and power efficiency. According to Nvidia, the A100 houses 54 billion transistors, making it the largest 7nm chip in the world. The chip will give machines the power needed to crunch a lot of data fast and will make servers more flexible.

“It's going to unify that infrastructure into something much more flexible, much more fungible and increase its utility makes it a lot easier to predict how much capacity you need.”

Training AI

The new A100 GPU will be used by tech giants like Microsoft, Google, Baidu, Amazon, and Alibaba for cloud computing, with huge server farms housing data from around the world. Reddit and Netflix, like most online services, keep their websites alive using the cloud. Nvidia said Microsoft will be among the first firms to use the A100 GPU.

The A100 will also benefit organizations such as research institutions, labs, and universities. The GPU will help in training and operating AI systems. Its flexibility allows firms to scale their servers up or down depending on their needs, and the A100 takes less time to train an AI program.

Five miracles

Nvidia attributes the A100's gains to five key innovations. The first is its Ampere architecture. This is the first time Nvidia has unified the acceleration workloads of the whole data center on a single platform, handling everything from image processing to video analytics.

The second is Nvidia's third-generation Tensor Cores, which speed up high-performance computing applications.

The third is multi-instance GPU, which lets companies partition a single A100 into as many as seven smaller GPUs. Companies can therefore use the A100 in different sizes, with different amounts of computing power, depending on their specific needs.

The fourth is Nvidia's third-generation NVLink, a high-speed interconnect between GPUs. The NVLink used for the A100 offers twice the speed of the previous generation, which allows multiple GPUs to be connected and operate as one giant GPU.

The fifth is structural sparsity, an efficiency technique that exploits the inherently sparse nature of AI math to double performance.
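To make the sparsity idea concrete, here is a minimal sketch in plain Python. It assumes the 2:4 pattern that Nvidia's Ampere documentation describes, in which at most two of every four consecutive weights are nonzero; the article itself does not spell out the pattern. The function name `prune_2_of_4` and the sample weights are hypothetical, and the sketch shows only the pruning pattern, not how the hardware skips the zeros.

```python
def prune_2_of_4(weights):
    """Keep the two largest-magnitude weights in each group of four.

    Assumption: a 2:4 structured-sparsity pattern (two nonzeros per
    group of four). The other two weights in each group are set to 0.0.
    """
    if len(weights) % 4 != 0:
        raise ValueError("number of weights must be a multiple of 4")

    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Positions of the two weights with the largest absolute value.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


# Hypothetical weights, two groups of four.
dense = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.8, 0.01]
sparse = prune_2_of_4(dense)
```

Because half of the values in every group are guaranteed to be zero, hardware built for this pattern can skip half of the multiplications, which is where the claimed doubling comes from.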

Other details

At the Thursday event, Nvidia also introduced the DGX A100 system. The DGX A100 comes with 8 A100 GPUs connected through NVLink and can handle intensive AI computing. It can deliver 5 petaflops of AI performance, packing the power and capabilities of an entire data center into one system.
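As a rough check on the 5-petaflop figure, the sketch below multiplies a per-GPU peak by the eight GPUs in the system. The per-GPU value of 624 teraflops is an assumption taken from Nvidia's published A100 specifications (peak FP16 Tensor Core throughput with sparsity), not from this article, and the function name is hypothetical.

```python
# Assumption: Nvidia's published peak FP16 Tensor Core throughput for one
# A100 with structural sparsity enabled, in teraflops (not from the article).
A100_SPARSE_FP16_TFLOPS = 624

# From the article: a DGX A100 contains 8 A100 GPUs.
GPUS_PER_DGX_A100 = 8


def system_peak_petaflops(per_gpu_tflops, gpu_count):
    """Sum the per-GPU peaks and convert teraflops to petaflops."""
    return per_gpu_tflops * gpu_count / 1000


total = system_peak_petaflops(A100_SPARSE_FP16_TFLOPS, GPUS_PER_DGX_A100)
print(f"{total:.3f} petaflops")
```

Under that assumption, the total rounds to the 5 petaflops quoted for the system. This is a theoretical peak, so real workloads would be expected to land below it.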

Nvidia also updated the software that accompanies the A100, including its deep recommender application framework Merlin, its multimodal conversational AI system Jarvis, and its high-performance computing SDK for debugging and optimizing code for the A100.

With its complete GPU software stack and tools, Jarvis helps developers build real-time conversational bots that can understand terminology specific to each firm and its customers. For example, a bank app with Jarvis built in could understand financial terms.

Jarvis is expected to come in handy during the COVID-19 pandemic as more and more people work remotely. In a press release, Nvidia CEO Jensen Huang said:

“Conversational AI is central to the future of many industries, as applications gain the ability to understand and communicate with nuance and contextual awareness. Nvidia Jarvis can help the healthcare, financial services, education and retail industries automate their overloaded customer support with speed and accuracy.”
