These Faces Illustrate The Advancement In AI Image Generation In Just Four Years

Harin - Dec 18, 2018

Artificial intelligence is developing at such a surprising pace that it is often hard to keep track of. But one field where the progress is easy to see is the use of neural networks to generate fake images.

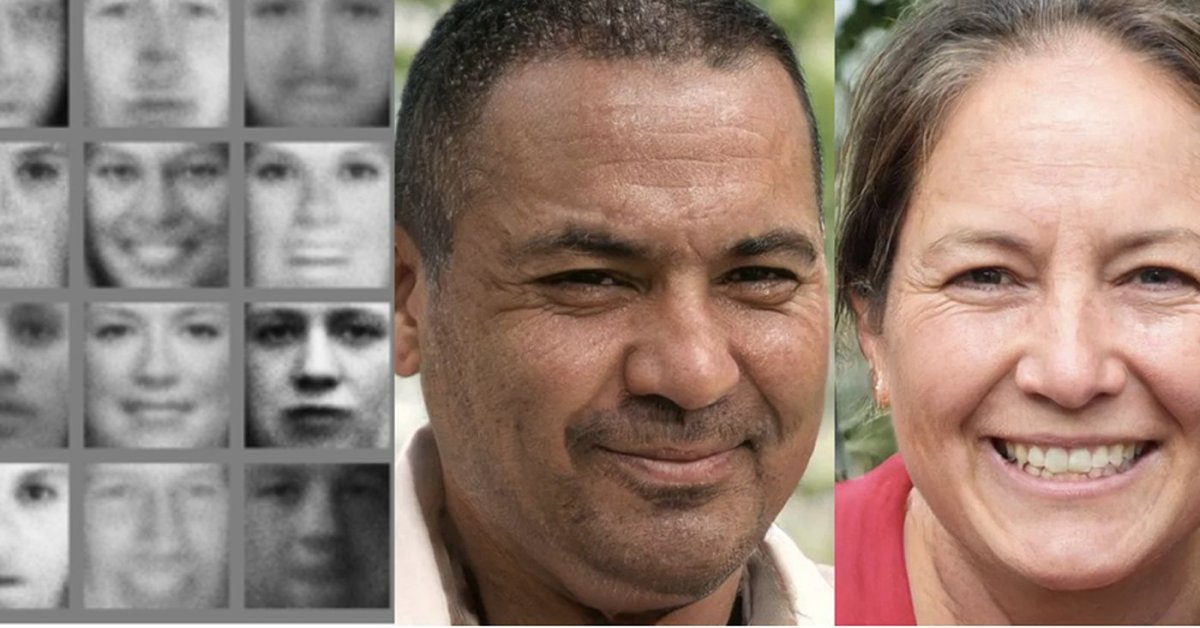

In this image, you can see four years of progress in AI image generation. The black-and-white faces on the left were generated by AI in 2014. The color faces on the right were published this month using the same method, yet their image quality is completely different.

These realistic-looking faces are the work of Nvidia researchers. In a paper published last week, they described how they created the images by modifying an AI tool called a generative adversarial network (GAN). Take a look at the images below. If you had no idea they weren't real, could you spot the difference?

What's interesting is that these faces can be customized. Nvidia's engineers integrated a style transfer method into their work, which lets the characteristics of different images be blended together. The term might sound familiar, as style transfer powers many image filters in popular apps like Facebook and Prisma.

Using this style transfer method, the Nvidia researchers can customize these faces to an impressive extent. The grid below is an example. Facial features from another person (right-hand column) were blended into the faces in the top row. Characteristics such as hair color and skin tone are carried over, creating a completely new person.
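To make the blending idea more concrete, here is a minimal sketch of per-layer "style mixing", assuming (as a simplification) that the generator takes one style vector per synthesis layer. The function name `mix_styles`, the 18-layer count, and the plain lists of floats standing in for learned style vectors are all illustrative assumptions, not Nvidia's code. The idea is that a new face keeps most layers' styles from face A but copies a chosen range of layers from face B; in Nvidia's paper, copying the high-resolution ("fine") layers mostly transfers color traits such as hair and skin color, while copying low-resolution layers transfers coarser traits like pose and face shape.

```python
from typing import List

# Hypothetical stand-in for a learned style vector: just a list of floats.
Style = List[float]


def mix_styles(source_a: List[Style], source_b: List[Style],
               start: int, end: int) -> List[Style]:
    """Return per-layer styles from source_a, except layers in [start, end),
    which are copied from source_b."""
    if len(source_a) != len(source_b):
        raise ValueError("both sources must provide one style per layer")
    mixed: List[Style] = []
    for layer, (style_a, style_b) in enumerate(zip(source_a, source_b)):
        # Copy the chosen source so the result never aliases the inputs.
        chosen = style_b if start <= layer < end else style_a
        mixed.append(list(chosen))
    return mixed


if __name__ == "__main__":
    num_layers = 18  # assumption: one style input per synthesis layer
    face_a = [[0.0] * 4 for _ in range(num_layers)]
    face_b = [[1.0] * 4 for _ in range(num_layers)]
    # Take the "fine" layers (8 onward) from face_b, the rest from face_a.
    child = mix_styles(face_a, face_b, start=8, end=num_layers)
    print([style[0] for style in child])  # eight 0.0 values, then ten 1.0 values
```

In a real generator, the mixed list would be fed layer by layer into the synthesis network; the sketch only shows how the two sources are combined.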

Of course, many questions have been raised around AI’s ability to generate realistic looking faces.

Experts have expressed concern about how AI's ability to create fake images could affect society. These tools could be exploited for propaganda and misinformation, which might undermine public trust in photographic evidence and, in turn, harm the justice system and politics. Unfortunately, these issues are not discussed in Nvidia's paper. When asked about them, the company said it couldn't reveal any information yet.

We should not overlook these warnings in any sense. The faces of many famous women have already been swapped into pornographic images known as "deepfakes". There are people willing to use these tools in harmful ways.

However, generating images with AI still requires time as well as expertise. The Nvidia researchers spent a week training their model on eight Tesla GPUs before it could create these faces.

We can also look for clues to detect the fakes. In a recent blog post, coder and artist Kyle McDonald compiled a list of them. For instance, hair is extremely difficult to fake: it tends to look either too regular or too blurry. AI systems also don't quite get the symmetry of the human face, so ears are often placed at different heights, or the eyes may be different colors. AI is also not very good at generating numbers or text.
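As a toy illustration of one of McDonald's clues (eyes of different colors), the sketch below compares the average colors of the two irises and flags the face if they differ too much. It assumes the iris colors have already been measured by some other step, and the function name `eyes_mismatch` and the 60-unit threshold are hypothetical values chosen for illustration. It is a simple heuristic, not a reliable fake detector.

```python
import math
from typing import Tuple

# Average iris color as (R, G, B), each channel in 0-255.
RGB = Tuple[float, float, float]


def color_distance(c1: RGB, c2: RGB) -> float:
    """Euclidean distance between two RGB colors."""
    return math.dist(c1, c2)


def eyes_mismatch(left_iris: RGB, right_iris: RGB,
                  threshold: float = 60.0) -> bool:
    """Flag a face whose two iris colors differ by more than `threshold`.

    The default threshold is an arbitrary assumption, not a tuned value.
    """
    return color_distance(left_iris, right_iris) > threshold


if __name__ == "__main__":
    print(eyes_mismatch((70, 110, 160), (72, 108, 158)))  # nearly identical blue eyes
    print(eyes_mismatch((70, 110, 160), (110, 70, 30)))   # blue vs. brown
```

Real faces can also have slightly different-looking irises because of lighting, so a check like this would produce false alarms on its own.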

However, given what we saw at the beginning of this post, these clues offer little comfort. Nvidia's work shows how quickly AI is advancing, so it probably won't be long before algorithms that avoid these tell-tale signs are developed.

Fortunately, experts are considering new methods for authenticating digital images. Some solutions are already in use, such as camera apps that embed geocodes in pictures to verify where and when the photos were taken. Clearly, there is going to be a battle between image authentication and AI fakery. For now, at least, AI has the upper hand.
