Smarter Tech Tools Needed To Fight Child Sex Abuse Images, Google Expert Says

Viswamitra Jayavant - Feb 01, 2019

The Internet has made the mass distribution of child abuse and exploitation images and videos extremely easy. Investigators and content moderators need new tech tools to help them combat it.

One of the worst jobs you can get in the tech industry is ‘Community Manager’ or ‘Content Moderator.’ The nature of the job requires people to view images that can leave deep emotional scars.

The Problem

Have you ever wondered why disturbing content, from graphic images to child pornography, rarely, if ever, appears in your Facebook feed?

That’s the content moderators at work. Companies that have to combat this problem try to minimize their moderators’ trauma-inducing workload as much as possible by relying on machine learning, but automatic recognition software is not foolproof. It sometimes mistakes ordinary content for inappropriate content, so humans are still required to sift manually through these images and videos and tell the two apart.

The reality was that, according to the filing of the moderators:

The Solution

The rapid advancement of technology and the ubiquity of smart devices make the job of sorting through this content more and more difficult. It is more convenient than ever to shoot a video, with smartphones available for as little as $100, so the rate at which these disturbing images and videos are produced is staggering. The anonymity that the Internet provides also lets this content pass from one hand to another with little fear of the law.

That’s why researchers think the only way to stem this darkness is to rely on artificial intelligence and custom-built software. Elie Bursztein, director of the anti-abuse team at Google, believes that successfully developing these kinds of technology could be a transformative step toward rescuing abused children and arresting predators.

To give you a sense of how impossible it would be to rely on humans alone to stop the spread of child pornographic material: the number of child exploitation images exploded to 18.4 million in 2018, about 6,000 times the number estimated for 1998. The statistics come from the National Center for Missing and Exploited Children (NCMEC), a nonprofit organization whose primary job is to track child abuse and exploitation content and to work with law enforcement agencies to hunt down the perpetrators.

Race to Develop Predator-Fighting Technologies

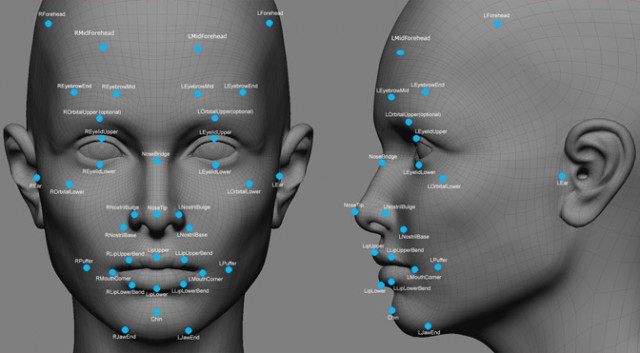

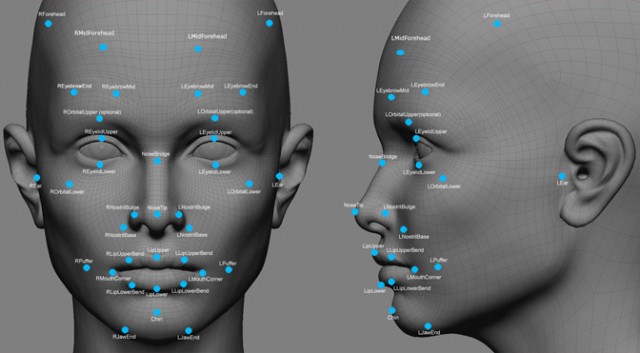

In an attempt to make an efficient moderation tool a reality, Bursztein collaborated with NCMEC and Thorn to develop the necessary technologies. The processing speed of artificial intelligence means investigators can find material relevant to solving a case far more quickly and efficiently. Among the promising tools in the battle against child abuse content are a facial analysis programme capable of telling investigators how many features of a perpetrator’s face are visible, and software capable of prioritizing images.

As not all images are created equal, this tool can set aside pictures that cannot be processed or aren’t valuable to the investigation, such as photos where the perpetrator’s face is blurry or not visible. By aggregating the best information surrounding a case, it lets investigators focus on the pieces of evidence that truly matter, which could drastically increase the speed at which the case is solved and the child rescued.

Lastly, deep learning can string relevant images together, for example by grouping images that each show a different part of the perpetrator’s face. It can then combine that information to give investigators the predator’s actual face.

Psychological Defence

It is important to note that, at least for the moment, the technology cannot replace human investigators entirely. They still need to look at the images themselves and decide how useful each one is to the investigation, and whether the programmes’ results can be trusted. Deciding whether someone is guilty or innocent is not a job that should be left to machines.

Aside from developing investigative tools, Bursztein and his team have also developed techniques to reduce the psychological strain on investigators and reviewers. The impact of disturbing photos can be alleviated somewhat with a method called ‘style transfer’, which filters a photo so that it looks like an illustration. Investigators can still review the images and determine their contents without being as deeply affected by their nature.

The technique has yet to be properly trialed and, for now, is only a proposal. But in an independent experiment conducted by Bursztein and his team, ‘style transfer’ was found to reduce the psychological strain on moderators by up to 16%.
