New AI Hides Your Emotions From Other AI Systems

Kumari Shrivastav - Sep 13, 2019

Smart speakers can infringe on our privacy by detecting our emotions, so researchers have built a tool to protect users.

When people communicate, they express a range of nonverbal feelings, from exasperated groans to terrified shrieks. From arousal to disgust, agony to ecstasy, many subtle emotions can be read from vocal cues and inflections. Even a simple phrase can carry several meanings. That makes this personal data very valuable to any company that collects it.

When a person speaks to a voice assistant that is connected to the Internet, their speech is likely to be recorded. In response, researchers at Imperial College London have created a layer that sits between users and the cloud to which their personal information is uploaded. This AI helps users hide their emotions by automatically converting emotional speech into neutral speech. The research paper, titled “Emotionless: Privacy-Preserving Speech Analysis for Voice Assistants”, is available on the arXiv preprint server.

Amazon and other companies keep investing in AI that can detect emotions. Our voices can reveal personal information such as age, gender, physical condition, stress level, and confidence, and smart speaker providers are well aware of this.

According to Ranya Aloufi, the lead researcher, an accurate emotion detector that pins down a person's emotional state and personal preferences may infringe on our privacy significantly.

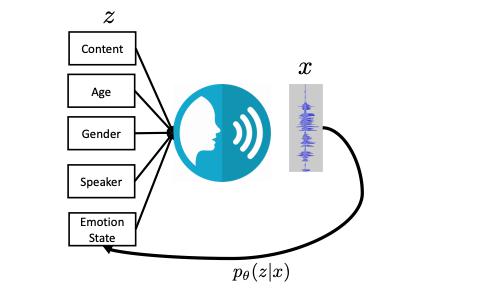

The researchers reported that, in an experiment, their method reduced emotion detection by 96%, although it also lowered speech recognition accuracy, with a word error rate of 35%. To mask emotion, the method collects speech, analyzes it, and extracts the emotional features from the raw signal. An AI program is then trained on these indicators, identifies the emotional signals in the speech, and flattens them. Finally, a voice synthesizer uses the AI's output to regenerate the normalized speech, and it is this version that is stored in the cloud.
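To give a rough sense of what "flattening" an emotional cue can mean, here is a toy Python sketch. It is not the researchers' method: it assumes pitch is the only cue, that pitch has already been estimated per frame, and that a frame value of 0.0 marks silence or unvoiced sound. The function name `flatten_pitch` and the `strength` parameter are hypothetical and used for illustration only.

```python
from statistics import mean


def flatten_pitch(f0_hz, strength=1.0):
    """Pull each voiced frame's pitch toward the utterance's mean pitch.

    f0_hz: per-frame pitch estimates in Hz; 0.0 marks an unvoiced frame
           (an assumption of this sketch, not something from the paper).
    strength: 0.0 leaves the contour unchanged, 1.0 makes it fully flat.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")

    voiced = [f for f in f0_hz if f > 0]
    if not voiced:
        # Nothing to flatten if no frame carries pitch.
        return list(f0_hz)

    center = mean(voiced)
    # Move voiced frames toward the mean; leave unvoiced frames untouched.
    return [f + strength * (center - f) if f > 0 else 0.0 for f in f0_hz]


# An expressive contour with wide pitch swings (made-up values).
contour = [0.0, 180.0, 220.0, 260.0, 0.0, 140.0]
print(flatten_pitch(contour))       # fully flattened
print(flatten_pitch(contour, 0.5))  # swings halved
```

A real system would have to handle many more cues than pitch, such as energy, rhythm, and voice quality, and would then resynthesize audio from the modified features, which is the part the article attributes to a voice synthesizer.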

Understanding emotions is part of what makes a machine seem human-like, and it has long been a goal of many futurists and companies. In recent years, AI speech engineers have come to treat emotion recognition as a critical objective. Speech Emotion Recognition (SER), which first appeared in the late 1990s, is an older field than any of the well-known speech recognition systems behind Google Home, Siri, or Alexa devices.

In 2018, Huawei stated that it was developing an emotion-detecting AI to deploy in its voice assistant programs. Huawei's vice president of software engineering told CNBC:

Back in May, Alexa's speech engineers published research in which adversarial networks were used to detect emotions in the digital assistant. They wrote in their research that emotion

Those firms also hope that this highly sensitive data can help them sell more precisely targeted ads.

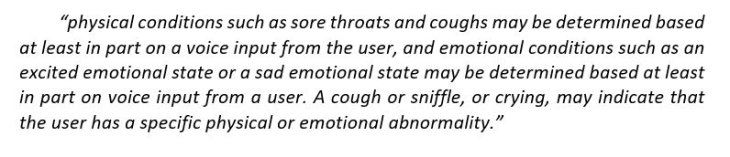

In a 2017 patent on emotional speech detection, Amazon uses sickness as an example:

After picking up these cues, a virtual assistant such as Alexa would combine them with your purchase and browsing history to offer highly specific advertisements for medications and other products. Aloufi said that consumers can do little to stop their personal data from being used this way unless smart home devices such as Google Home and Alexa provide more privacy protections. She shared:
