This Decoder Can Turn Signals The Brain Makes Into Speech

Aadhya Khatri - May 06, 2019

Listeners can identify the speaker's gender and intonation from the speech the decoder produces

Conditions such as amyotrophic lateral sclerosis (ALS), Parkinson's disease, throat cancer, and paralysis have long left many patients completely unable to speak. Now, scientists have built a machine that can translate brain activity into speech.

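The project was led by Edward Chang, a neurosurgeon at the University of California, San Francisco (UCSF), who works extensively in brain mapping to protect the areas of the brain that are vital for speech.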

This breakthrough gives hope to people who have lost their voice and currently depend on communication aids that are too slow for a normal conversation. The system Stephen Hawking used is a prime example of how limited such speech synthesizers are: they spell out words one letter at a time, driven by the user's eye movements or facial muscle movements. While a person with typical speech can say about 100 to 150 words per minute, these devices manage an average of about 8 words per minute.

The New Technique Turns Brain Signals Into Speech

Several past efforts have tried to decode speech from brain activity, but none produced strong results. That earlier work focused on how the brain responds when speech is produced.

What sets Chang and his colleagues apart from previous researchers is their approach: they studied the parts of the brain that coordinate the tongue, lips, throat, and jaw to produce speech.

According to Gopala Krishna Anumanchipalli, a postdoctoral scholar in UCSF's Department of Neurological Surgery and the lead author of the study, published in Nature, the team reasoned that because these brain regions encode the movements that make sounds rather than the sounds themselves, they should decode those movements.

That is the principle behind the new method. To decipher these signals, however, the scientists had to overcome a major obstacle: the movements our bodies make to produce speech are highly complex.

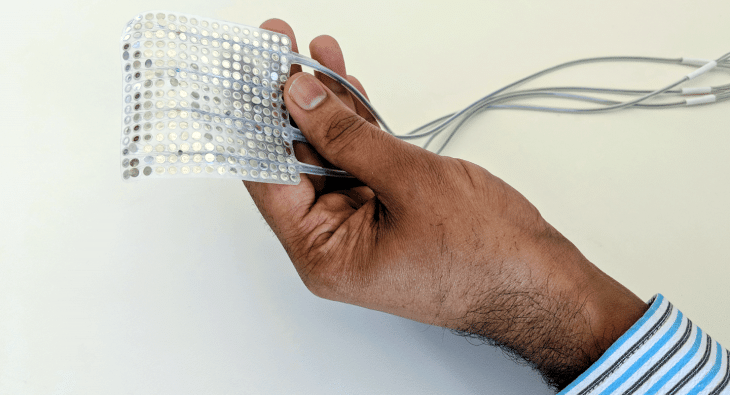

The study involved five volunteers with epilepsy who were due to undergo surgery. To prepare for their operations, the patients had electrodes implanted in their brains to help surgeons find the source of their seizures. Once the electrodes were in place, the scientists asked the volunteers to read a few hundred sentences aloud while recording their brain activity.

The scientists decode speech in two steps. The first translates brain signals into the vocal movements those signals would trigger; the second translates these movements into sounds.

The scientists only needed to tackle the first step, because they already had an extensive collection of data linking movements to sounds.

These data were then fed to a machine learning algorithm, which learned to link brain signals to the body's movements. With this technology, brain activity can be turned into a semblance of the human voice.
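To make the two-step idea concrete, here is a toy sketch in Python. The article does not say which machine learning algorithm the team used, so this sketch swaps in ordinary least-squares line fits as a stand-in; each number represents what would really be many brain and movement measurements, and every name and value below is hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LinearMap:
    """A one-dimensional mapping y = slope * x + intercept (toy stand-in for a trained model)."""
    slope: float
    intercept: float

    def __call__(self, x: float) -> float:
        return self.slope * x + self.intercept


def fit_linear(xs: List[float], ys: List[float]) -> LinearMap:
    """Ordinary least-squares line through the (x, y) training pairs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return LinearMap(slope, mean_y - slope * mean_x)


@dataclass
class TwoStageDecoder:
    brain_to_movement: LinearMap  # step 1: brain signal -> vocal movement
    movement_to_sound: LinearMap  # step 2: vocal movement -> sound feature

    def decode(self, brain_signal: float) -> float:
        movement = self.brain_to_movement(brain_signal)
        return self.movement_to_sound(movement)


# Step 1 is trained on paired brain/movement recordings (hypothetical numbers).
brain = [0.0, 1.0, 2.0, 3.0, 4.0]
movement = [1.0, 3.0, 5.0, 7.0, 9.0]
# Step 2 is trained on a separate, pre-existing movement-to-sound collection (hypothetical numbers).
library_movement = [0.0, 2.0, 4.0, 6.0]
library_sound = [-2.0, 4.0, 10.0, 16.0]

decoder = TwoStageDecoder(fit_linear(brain, movement), fit_linear(library_movement, library_sound))
print(decoder.decode(5.0))  # → 31.0
```

In this toy, chaining the stages means step 2 is fitted once on the existing movement-to-sound data and reused, so only step 1 has to be learned from the brain recordings.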

The team produced audio samples to demonstrate the result. The synthesized speech sounds quite similar to a real person's voice, though with what sounds like a foreign accent.

To measure how intelligible the speech was, the team ran another test in which a large number of people on Amazon’s Mechanical Turk transcribed the audio. The listeners heard 100 sentences and chose words from a pool of 25 that mixed the target words with random ones. They transcribed 43% of the sentences with perfect accuracy.
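The article does not explain how the transcripts were scored. One strict way to score a test like this is to count a sentence as correct only when the listener's transcript matches the reference word for word. The sketch below assumes that rule; the function names and sentences are hypothetical and not taken from the study.

```python
from typing import List


def normalize(sentence: str) -> List[str]:
    """Lower-case and split on whitespace so capitalization and spacing do not count as errors."""
    return sentence.lower().split()


def perfect_sentence_rate(references: List[str], transcripts: List[str]) -> float:
    """Fraction of sentences whose transcript matches the reference word for word."""
    if not references or len(references) != len(transcripts):
        raise ValueError("need one transcript for each reference sentence")
    exact = sum(normalize(ref) == normalize(hyp) for ref, hyp in zip(references, transcripts))
    return exact / len(references)


# Hypothetical example: four reference sentences and one listener's transcripts.
references = ["the boy ran home", "she sells fish", "rain fell all day", "open the door"]
transcripts = ["the boy ran home", "she sells fresh", "Rain fell all day", "open a door"]

print(perfect_sentence_rate(references, transcripts))  # → 0.5
```

A single wrong word makes the whole sentence count as a miss, which is why a sentence-level score like this tends to be much lower than the share of individual words a listener gets right.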

The decoder struggles with sounds such as “b” and “p” but handles “z” and “sh” fairly well, and it conveys the speaker's sex and intonation accurately.

According to Watkins, these flaws would not necessarily derail a conversation. The same thing happens in everyday life: listeners get used to the way a person speaks and become better at guessing the words they do not hear clearly.

The technology has real practical promise because the algorithm can decode sentences it was not trained to recognize.

One crucial question remains, though: the scientists must find out whether a person who has already lost their voice can learn to use the machine when it cannot be trained on that person's own speech.

Potential To Resharpen The Rapier

According to Jean-Dominique Bauby, a French magazine editor, what he lost after suffering a stroke was the ability to crack a joke.

Bauby died in 1997. After his stroke, he communicated mainly by blinking his left eye. Assistive technology has improved somewhat since his time, but the main problem remains.

The system Hawking used worked in a similar way: it detected small movements and translated them into letters that formed words. Such systems can predict what comes next to speed up the process, but they are still no match for the pace of natural speech.

This breakthrough could be good news for people who have lost their voice through accident or illness. In the best case, they could converse normally, conveying their full meaning and thoughts precisely, along with the trickier parts of speech.
