This Site Determines Whether Text Was Written By A Human Or A Bot

Anita - Mar 12, 2019

A new algorithm named GLTR has been created to detect whether text was written by a human or a bot, adding a new tool to the fight against fake news and misinformation.


OpenAI's developers announced last month that they had built a text-generating algorithm named GPT-2, which they said was too dangerous to release to the public because it could be used to flood the web with endless material written by a computer program.

Now a team of scientists from Harvard University and the MIT-IBM Watson AI Lab has created another algorithm, GLTR, which estimates how likely it is that a given passage of text was written by an algorithm such as GPT-2. It is an interesting escalation in the fight against spam.

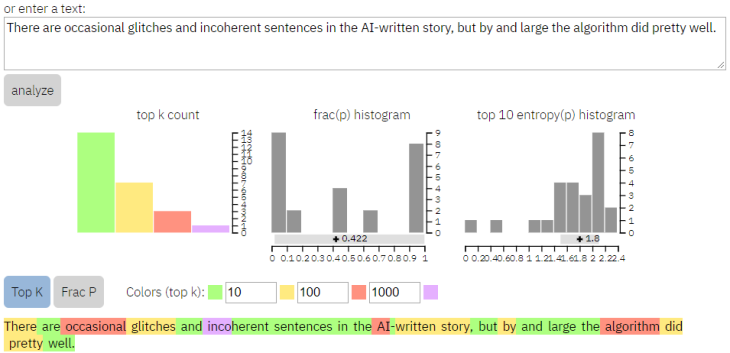

GLTR estimates how likely each word is to have been written by a bot like GPT-2 and highlights the words in different colors.

When OpenAI unveiled GPT-2, its developers showed how the tool could be used to write fictitious but convincing news articles by sharing one it had written about researchers discovering unicorns.

GLTR uses the same kind of model to read a finished piece of text and predict whether GPT-2 or a human wrote it. Because GPT-2 writes sentences by predicting which word is most likely to come next, GLTR checks whether each word in a passage is one that a bot writing fake news would likely have chosen.
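The ranking idea can be sketched in a few lines of Python. This is a minimal illustration, not GLTR's code: it assumes a toy bigram model, built by counting word pairs in a small hypothetical corpus, as a stand-in for GPT-2, and the names `train_bigram` and `token_ranks` are our own. GPT-2 conditions on all of the preceding text, while this toy model looks only at the previous word. For every word after the first, the sketch reports where that word ranks among the model's predictions.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram(corpus: str) -> dict:
    """Count which word follows which word.

    A toy, hypothetical stand-in for GPT-2's next-word predictions.
    """
    words = corpus.lower().split()
    follows: dict = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def token_ranks(text: str, model: dict) -> list[tuple[str, Optional[int]]]:
    """Rank each word (after the first) among the model's predictions.

    Rank 1 means the model's single most likely next word. None means the
    model never predicted that word after the previous one.
    """
    words = text.lower().split()
    ranks: list[tuple[str, Optional[int]]] = []
    for prev, word in zip(words, words[1:]):
        # Candidate next words, most frequent first; empty if prev is unseen.
        candidates = [w for w, _ in model.get(prev, Counter()).most_common()]
        rank = candidates.index(word) + 1 if word in candidates else None
        ranks.append((word, rank))
    return ranks
```

With the corpus `"the cat sat on the mat the cat ate the fish"`, calling `token_ranks("the cat sat on the fish", model)` ranks every word first except "fish", which ranks third. A passage where nearly every word sits near the top of the ranking is exactly the kind of text a next-word predictor would produce.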

The scientists from Harvard, MIT, and IBM working on the project created a website that lets people try GLTR for themselves. The tool estimates how likely each word is to have been written by a bot like GPT-2 and highlights the words in different colors. Words highlighted in green are ones the model considered among the most likely next words, which points to text written by GPT-2. Words in yellow, red, and especially purple are progressively less predictable and suggest the text was probably written by a human.
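The color coding can be sketched as a simple mapping from a word's rank to a color. This assumes the rank cut-offs used by the public GLTR demo: top 10 green, top 100 yellow, top 1000 red, and everything else purple. `gltr_color` and the `green_share` summary score are hypothetical helpers for illustration and are not part of GLTR itself.

```python
from typing import Optional


def gltr_color(rank: Optional[int]) -> str:
    """Map a word's rank (1 = most likely) to a highlight color.

    Assumes GLTR-style cut-offs; None means the model never predicted the word.
    """
    if rank is not None and rank <= 10:
        return "green"   # among the model's 10 most likely next words
    if rank is not None and rank <= 100:
        return "yellow"
    if rank is not None and rank <= 1000:
        return "red"
    return "purple"      # outside the top 1000, or never predicted at all


def green_share(ranks: list[Optional[int]]) -> float:
    """Fraction of words colored green (a hypothetical summary score).

    A high share hints at machine-written text; a low share suggests a human.
    """
    if not ranks:
        return 0.0
    return sum(gltr_color(r) == "green" for r in ranks) / len(ranks)
```

For example, `green_share([1, 2, 50, None])` returns `0.5`, since two of the four words fall in the top 10. A single threshold on such a score is a simplification: the site shows the colors to a human reader rather than issuing a verdict.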

How the method works

However, AI researcher Janelle Shane found that GLTR does not reliably detect text produced by generators other than OpenAI's GPT-2.

After testing the site on output from her own text generator, Shane found that GLTR wrongly concluded the words were too hard to predict to have come from a bot, and so must have been written by a human. This suggests we will need more than this tool in the continuing fight against fake news and misinformation.
