Tech That Can Detect Your Emotions May Not Be A Good Thing At All

Viswamitra Jayavant - Mar 22, 2019

So-called 'emotion detection systems' are considered the next new thing in the tech industry. Yet their arrival in our lives may not be as good as it sounds.

Despite all the talk about artificial intelligence and 'machine consciousness', machines, at least for now, do not have emotions. They are, however, orders of magnitude better than us at interpreting data. The reasoning goes that, because emotions are often expressed subtly on our faces, machines equipped with sensitive sensors and sophisticated software should be able to recognise them better than we can.

Tech Industry's 'Next New Thing'

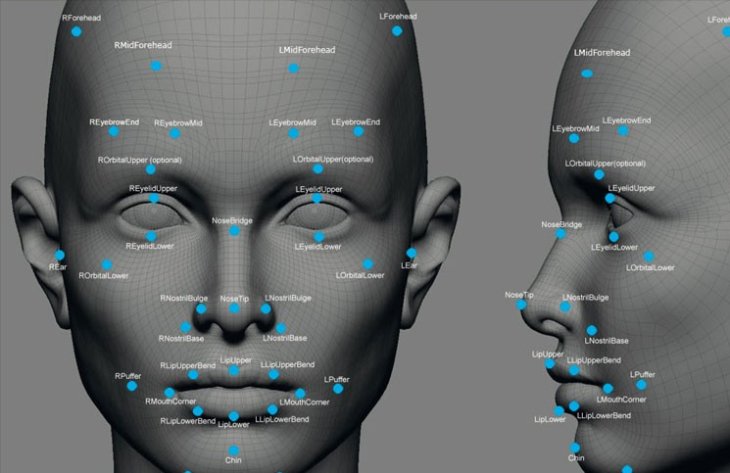

How a person's face looks to a computer.

At least, that’s the hope in the tech industry.

Many players in the industry, from small startups to giants such as Amazon and Microsoft, are buying into this concept. Companies are offering so-called 'emotion analysis' products that, as the name suggests, claim to read what a person is feeling. That is, of course, a marvel of engineering and programming. Once applied to everyday decision-making, however, such as evaluating job interviews or informing admission decisions in education, it may not be positive at all.

The System

Basically, an 'emotion detection system' combines two technologies.

The first is what's called 'computer vision'. To analyse a person's face for emotions, the computer must, of course, be able to see it in the first place. The second is an artificial intelligence system capable of performing the calculations needed to 'guess' what the person is feeling.

For example, it could label someone who is grinning widely or whose facial muscles are relaxed as 'Happy'. Conversely, someone with an aggressive scowl and a sneer could be interpreted as 'Angry'.
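To make the second stage more concrete, here is a minimal, purely illustrative sketch of the 'features in, label out' step described above. It assumes the computer-vision stage has already reduced a face to a few numbers between 0 and 1; the feature names (`mouth_corner_lift`, `muscle_tension`, `brow_lowering`, `upper_lip_raise`) and thresholds are hypothetical. Commercial products use trained statistical models rather than hand-written rules like these, so this is not how any particular vendor's system works.

```python
from dataclasses import dataclass


@dataclass
class FaceFeatures:
    """Hypothetical measurements a computer-vision stage might output.

    All values are assumed to be normalised to the range 0.0-1.0.
    """
    mouth_corner_lift: float  # how far the lip corners are raised (a grin)
    muscle_tension: float     # overall facial muscle tension (low = relaxed)
    brow_lowering: float      # how far the brows are pulled down (a scowl)
    upper_lip_raise: float    # how far the upper lip is raised (a sneer)


def label_emotion(f: FaceFeatures, strong: float = 0.6, relaxed: float = 0.3) -> str:
    """Map features to a coarse label using hand-written rules (an assumption;
    real systems learn this mapping from labelled training data)."""
    # A scowl combined with a sneer is read as anger.
    if f.brow_lowering >= strong and f.upper_lip_raise >= strong:
        return "Angry"
    # A wide grin, or a relaxed face, is read as happiness.
    if f.mouth_corner_lift >= strong or f.muscle_tension <= relaxed:
        return "Happy"
    # Anything else falls through to a default label.
    return "Neutral"


grin = FaceFeatures(mouth_corner_lift=0.9, muscle_tension=0.2,
                    brow_lowering=0.1, upper_lip_raise=0.1)
scowl = FaceFeatures(mouth_corner_lift=0.1, muscle_tension=0.8,
                     brow_lowering=0.8, upper_lip_raise=0.7)
print(label_emotion(grin))   # Happy
print(label_emotion(scowl))  # Angry
```

Even this toy version shows where errors creep in: the label depends entirely on how reliably the vision stage measures each feature and on where the thresholds are drawn.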

Current Use

Though these systems might sound like science fiction, or at least not yet widely used, they are already deployed in a wide range of applications by a large number of corporate users, including IBM, Unilever, and even Disney. Disney, specifically, uses the system to gauge audience reactions during screenings to get an idea of how viewers feel about a movie.

The technology is gradually spreading beyond corporate users and finding its way into smaller, everyday applications.

The Downside

You don't need to be an expert to see the benefits such a system could bring to corporations like IBM and Unilever. Companies are always thirsty for market data, and such systems are a godsend for collecting customer reactions, much as Disney has done.

But those benefits only materialise if the systems are extremely accurate, and that is not the case here.

A study by researchers from Wake Forest University in December detailed their trials of several emotion detection systems. They found that these systems tended to attribute more negative emotions to black people than to white people, even when the degree of joy on their faces was similar. It is a significant finding, especially since one of the systems tested came from Microsoft.
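The core of that kind of comparison is to hold the visible expression roughly constant and then check whether the system's negative-emotion scores still differ between groups. The sketch below illustrates that idea only; it is not the Wake Forest researchers' methodology. It assumes each face has already been scored by some emotion API with a smile (joy) score and a negative-emotion score, both between 0 and 1, and the function name, group labels and bin count are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def negative_score_gap(records, group_a, group_b, n_bins=5):
    """Compare mean negative-emotion scores of two groups within smile bins.

    records: iterable of (group, smile_score, negative_score) tuples, with
    scores assumed to lie in 0.0-1.0 (hypothetical output of an emotion API).
    Returns {bin_index: mean_negative(group_a) - mean_negative(group_b)} for
    every bin that contains faces from both groups. A positive value means
    group_a was rated more negatively than group_b despite similar smiles.
    """
    # bins[bin_index][group] -> list of negative-emotion scores
    bins = defaultdict(lambda: defaultdict(list))
    for group, smile, negative in records:
        # Clamp so that smile == 1.0 lands in the top bin instead of overflowing.
        b = min(int(smile * n_bins), n_bins - 1)
        bins[b][group].append(negative)

    gaps = {}
    for b in sorted(bins):
        a_scores, b_scores = bins[b][group_a], bins[b][group_b]
        # Only compare bins where both groups are represented.
        if a_scores and b_scores:
            gaps[b] = mean(a_scores) - mean(b_scores)
    return gaps
```

Binning by smile intensity is a deliberately simple way to compare 'equally joyful' faces; a real audit would also need a large, balanced set of images and a statistical test before drawing conclusions.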

The Inaccuracy Of AI

This is only one of many cases of AI misinterpreting data collected from people with darker skin, inadvertently recreating racial biases in the process.

The technology is inherently flawed and, as Whittaker said, deploying it now might do more harm than good to society.
