Marijuana or Broccoli? Facebook Demonstrates AI's Challenges With An Example

Ravi Adwani - May 04, 2019

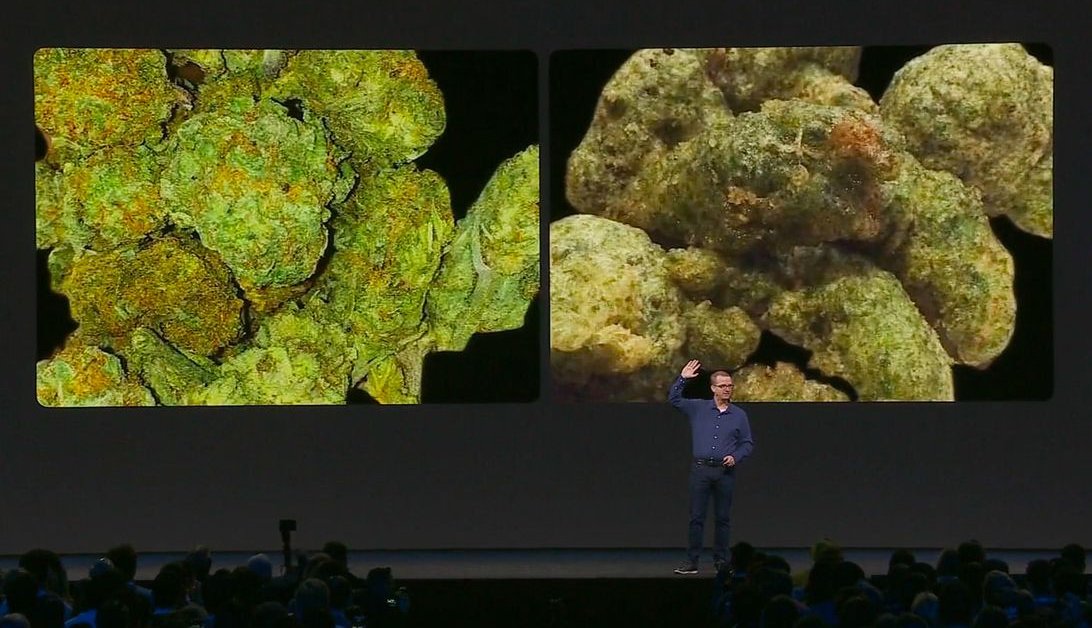

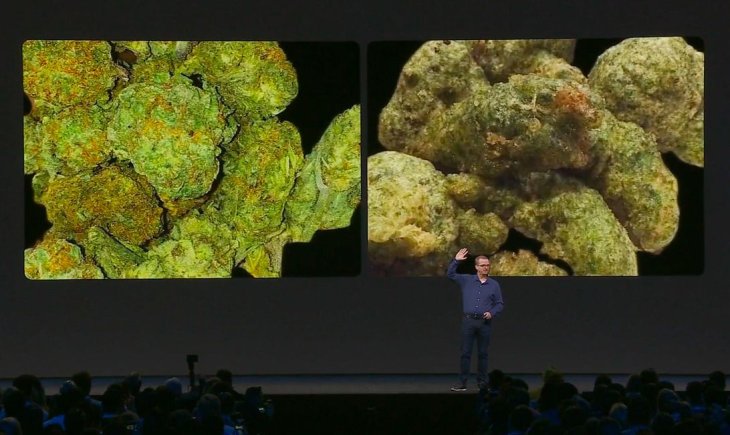

Facebook illustrated the challenges facing AI with an example in which a system must tell apart two similar-looking images, one of marijuana and one of broccoli.


Facebook has been using both human employees and artificial intelligence (AI) to deal with misinformation, hate speech, and election meddling. Now the social network is putting its AI to a serious test.

The role of AI

Recently, the tech giant has faced a wave of criticism for failing to remove the live video of a terrorist attack. Content moderators who reviewed that video suffered serious trauma from viewing such violent and gruesome content.

That said, AI has helped Facebook in many ways, such as flagging spam, nudity, fake accounts, and similar offensive content. Overall, however, AI has shown mixed results. On April 1, Facebook CTO Mike Schroepfer admitted that AI hasn't solved all of the complex problems out there. Even so, the company has been making progress, which Schroepfer discussed at the F8 conference.

Marijuana or broccoli?

In front of an audience, Schroepfer displayed two surprisingly similar pictures, one of marijuana and one of broccoli tempura. Thanks to a new algorithm Facebook has implemented, a computer can now spot the differences and tell the two apart. According to Schroepfer, similar techniques are used to recognize suspicious images on the social network.

Facebook obviously doesn't allow recreational drugs on its platform, so people have been finding ways around the system, for example by disguising drugs in the ordinary packaging of goods or treats. The company caught on, and its AI can now flag such images for further investigation.

Facebook's AI has faced another challenge as well: when building the smart camera for its Portal device, the team had to make sure it treated different genders, ages, and skin tones fairly.

Dealing with hate speech

Facebook is also training its systems with less human supervision to tackle election-related hate speech. Because AI is in charge of moderating content, it has to fairly balance the concerns of all groups of people. People can also genuinely disagree about what counts as hate speech or misinformation.

To make that determination, according to Isabel Kloumann, a data scientist at Facebook, it is important to identify both the speaker and their target. Facebook must also treat people from different groups equally while balancing the associated safety concerns.
