Deepfake AI Changes What People Say On Video With Transcript Editing

Harin - Jun 13, 2019

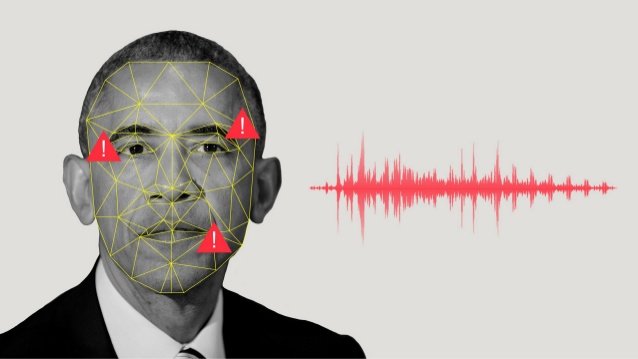

Researchers have developed an algorithm that simplifies deepfake creation to a terrifying degree: a video’s subject “says” whatever edits are made to the clip’s transcript.

Creating a convincing deepfake now requires little more than the ability to type. Recent advances in AI have made it easier to produce audio or video clips in which a person appears to say or do things they never actually said or did.

Researchers have now built an algorithm that simplifies the deepfake creation process to a frightening degree. It makes the subject of a video say whatever edits are made to the clip’s transcript. Even its creators worry about what could happen if the technology falls into the wrong hands.

The researchers, who are from Princeton University, the Max Planck Institute for Informatics, Stanford University, and Adobe, describe how their new algorithm works in a paper published on the website of Stanford scientist Ohad Fried.

First, the AI analyzes a source video of a real person speaking. It doesn’t only look at their words, however. It also identifies each phoneme the person utters and how the person looks when saying it.

English has about 44 phonemes. The researchers said that the AI needs only 40 minutes of video to collect all the pieces required to make a person appear to say anything.

All a person has to do is edit the video’s transcript. The AI then generates a deepfake that matches the rewritten transcript by stitching together the necessary sounds and mouth movements.
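To make the idea concrete, here is a minimal Python sketch of only the first, text-level step: finding which words in a transcript were changed and which phonemes the new words would call for. It is not the researchers' method. The function names and the tiny `TOY_PHONEMES` table are hypothetical and exist purely for illustration, and the hard part, synthesizing matching audio and mouth movements, is not shown.

```python
from difflib import SequenceMatcher

# Hypothetical toy pronunciation table (ARPAbet-style symbols), invented for
# this illustration. A real system would rely on a full pronouncing
# dictionary and on aligning phonemes with the video frames.
TOY_PHONEMES = {
    "i": ["AY"],
    "love": ["L", "AH", "V"],
    "hate": ["HH", "EY", "T"],
    "pizza": ["P", "IY", "T", "S", "AH"],
}


def changed_words(original: str, edited: str):
    """Return (old_words, new_words) pairs for each edited span."""
    old, new = original.lower().split(), edited.lower().split()
    matcher = SequenceMatcher(a=old, b=new, autojunk=False)
    return [
        (old[i1:i2], new[j1:j2])
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"  # keep only replaced, inserted, or deleted spans
    ]


def phonemes_needed(words):
    """Flatten the phonemes for the given words (KeyError if a word is unknown)."""
    return [p for w in words for p in TOY_PHONEMES[w]]


edits = changed_words("I love pizza", "I hate pizza")
needed = [phonemes_needed(new) for _, new in edits]
print(edits)   # the word-level differences between the two transcripts
print(needed)  # phonemes the edited words would require
```

In this toy run, only one word differs between the two transcripts, so only that word's phonemes would need to be located in the source footage and reassembled.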

Judging by the video demonstrating the new algorithm, it appears best suited to minor changes. Even so, the researchers themselves are concerned that some people might put it to more destructive uses.

In their paper, they write:
