Bumble Will Deploy AI to Detect Unwanted Nudes

Harin - Apr 25, 2019

Dating app Bumble is launching an AI-assisted "private detector" to warn users about lewd images and weed them out of the platform.

Soon, AI will flag any NSFW photos you receive from a match on Bumble. The dating app, on which women must be the ones to start the conversation, said it is introducing a “private detector” to identify lewd images. The announcement was made in a press release on April 24.

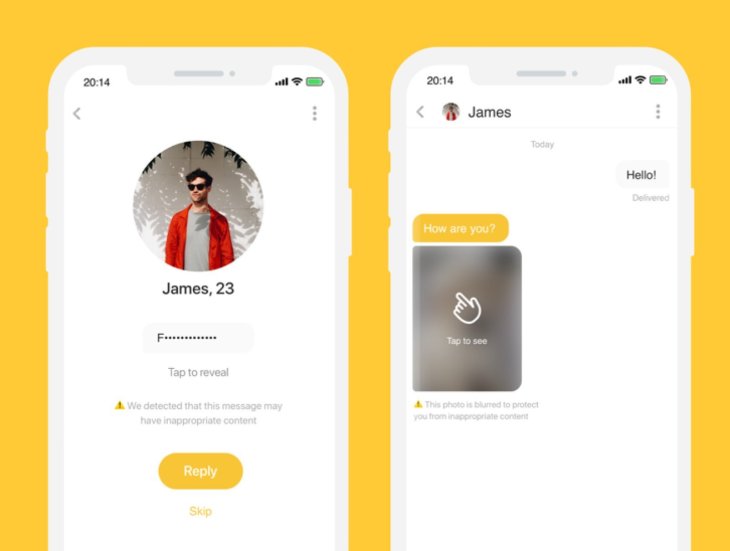

Starting in June, an AI-assisted “private detector” will screen all images sent on Bumble. If it flags a photo as potentially inappropriate or lewd, users will be warned and can choose to view it, block it, or report it to moderators before opening it.

Many women encounter unwanted sexual advances online, and the problem is considerably worse on dating apps. A 2016 Consumers’ Research study found that 57% of women reported feeling harassed on dating apps, compared with only 21% of men.

Unlike Tinder or Hinge, Bumble lets its users send photos to their matches. These images are blurred, and recipients must press on them to view them. This offers users some protection from sexual photos, but the company evidently does not consider it enough.

In a press release, Andreev said:

According to Bumble, its “private detector” is up to 98% effective. A Bumble spokesperson said the AI tool would also be able to identify pictures of guns and shirtless mirror selfies.

The spokesperson added that an AI tool for detecting harmful language and inappropriate messages is under development. Bumble will not be the first company to use AI to filter profiles and messages, however: Tinder has also been using an AI tool to detect “red-flag language and images.”
