Autonomous Vehicles Find It More Difficult To Detect People With Darker Skin

Viswamitra Jayavant - Mar 09, 2019

If left unfixed, this flaw could make crossing the street more dangerous for people with darker skin than for those with fairer complexions.

Facial recognition is still a relatively new technology, despite its growing popularity in products ranging from smartphones to autonomous vehicles. Smartphones such as the latest iPhones are turning it into an everyday feature, much as they did with fingerprint sensors. However, like any new technology, it has its problems.

Few people expected that the shortcomings of facial recognition systems would touch on such a sensitive topic: racism.

We have known for some time that these systems struggle to recognise people with darker skin tones. With software such as Amazon’s Rekognition, however, the problem is not especially pressing: it may be a mild inconvenience for people with darker skin, and it can be improved over time.

That is not something we can say with confidence about the recognition systems that autonomous vehicles rely on.

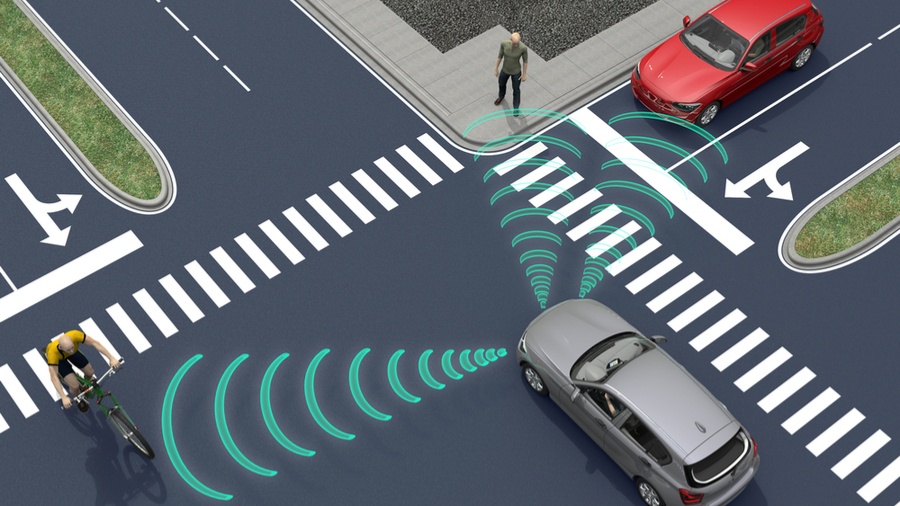

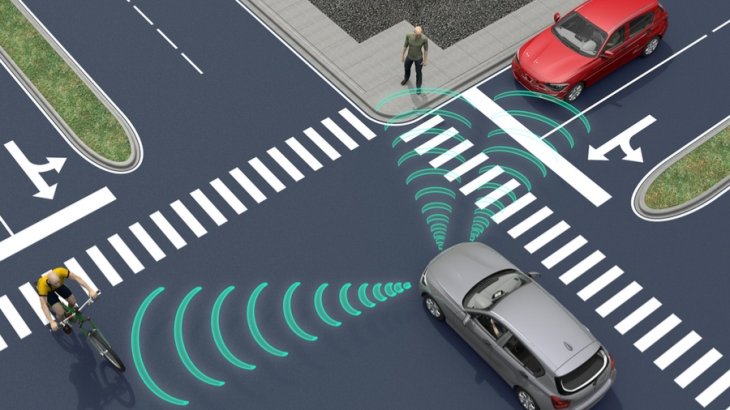

These artificially intelligent systems are tasked with spotting pedestrians and minimising the risk to both the vehicle and the people around it. Typically, when a car detects a pedestrian ahead and the two are on a collision course, it brakes before they collide.
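The braking behaviour described here can be illustrated with a simple time-to-collision check. This is a hypothetical sketch, not how any particular manufacturer’s system works: the `Detection` type, the `should_brake` function and the 2-second threshold are all assumptions made for illustration. It also shows why detection matters so much: a pedestrian the detector misses never reaches the braking logic at all.

```python
from dataclasses import dataclass

# Hypothetical threshold: brake if a collision is predicted within this many seconds.
BRAKE_TTC_SECONDS = 2.0


@dataclass
class Detection:
    label: str                # e.g. "pedestrian", as reported by the object detector
    distance_m: float         # distance from the car to the object, in metres
    closing_speed_mps: float  # how fast that gap is shrinking, in metres per second


def time_to_collision(d: Detection) -> float:
    """Seconds until impact if nothing changes; infinity if the gap is not closing."""
    if d.closing_speed_mps <= 0:
        return float("inf")
    return d.distance_m / d.closing_speed_mps


def should_brake(detections: list) -> bool:
    # The car can only react to pedestrians the detector actually reported.
    return any(
        d.label == "pedestrian" and time_to_collision(d) < BRAKE_TTC_SECONDS
        for d in detections
    )
```

For example, a pedestrian 15 metres ahead with the gap closing at 10 m/s gives a time to collision of 1.5 seconds, so `should_brake` returns `True`. If the detector fails to report that pedestrian, the list is empty and the car does not brake.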

The problem is that these systems fail to recognise people with darker skin tones more often than they fail to recognise people with lighter skin. Even though AI has no capacity for thought and cannot hold prejudices, the pattern is worrying.

Uniformly Poorer Performance

A paper by researchers from the Georgia Institute of Technology, published on arXiv, details their analysis of eight modern, top-of-the-line object detection models. These are the kinds of systems that autonomous vehicles rely on to detect objects, pedestrians and road signs.

To categorise the test images, the researchers used what is known as the Fitzpatrick scale, a scale commonly used to classify human skin tones. They sorted images of pedestrians into two groups: one with lighter skin on the scale, and the other with darker skin.

The results are worrying. All of the models showed “uniformly poorer performance” when tested on images of pedestrians with darker skin tones.

On average, accuracy dropped by about 5% for the group of pedestrians with darker skin. The gap persisted even after the researchers took into account environmental variables such as whether a photo was taken during the day or at night.
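To make this kind of comparison concrete, here is a rough sketch of how a per-group detection rate could be computed. It is not the researchers’ code, and their paper may use a different metric; this version uses plain recall, the share of annotated pedestrians the detector actually found. The split of Fitzpatrick types 1 to 3 as “lighter” and 4 to 6 as “darker”, and all function names, are assumptions for illustration. Results are broken down by lighting as well as skin tone, so a gap that appears under both day and night conditions cannot be blamed on lighting alone.

```python
from collections import defaultdict


def fitzpatrick_group(skin_type: int) -> str:
    """Map a Fitzpatrick skin type (1-6) to a coarse group.

    Assumption: types 1-3 count as "lighter" and 4-6 as "darker".
    """
    if not 1 <= skin_type <= 6:
        raise ValueError(f"Fitzpatrick type must be 1-6, got {skin_type}")
    return "lighter" if skin_type <= 3 else "darker"


def recall_by_group(pedestrians):
    """Share of annotated pedestrians the detector found, per (skin group, lighting).

    pedestrians: iterable of (skin_type, lighting, detected) tuples, where
    lighting is e.g. "day" or "night" and detected is True or False.
    """
    found = defaultdict(int)
    total = defaultdict(int)
    for skin_type, lighting, detected in pedestrians:
        key = (fitzpatrick_group(skin_type), lighting)
        total[key] += 1
        found[key] += int(detected)
    return {key: found[key] / total[key] for key in total}


def gap(rates, lighting):
    """Lighter-group recall minus darker-group recall under the same lighting."""
    return rates[("lighter", lighting)] - rates[("darker", lighting)]
```

Comparing `gap(rates, "day")` with `gap(rates, "night")` shows whether a disparity holds in both conditions rather than only in one.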

A Simple Solution

It is fortunate that the problem was spotted early, as the world moves slowly but surely toward a time when autonomous vehicles take over the roads. Left unaddressed, it could put darker-skinned pedestrians at greater risk than those with fairer complexions whenever they cross the street.

Thankfully, the solution is not that complex. Manufacturers need to include more images of darker-skinned pedestrians in the data sets their systems train on, and then have the system give priority to the examples it has found harder to detect.
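One way to make a model “focus more” on a group it struggles with is to give those examples a larger weight in the training loss. The sketch below is only an illustration under stated assumptions: it uses a simple per-prediction binary cross-entropy and a hypothetical weight of 2.0 for pedestrians of Fitzpatrick type 4 or above. Real detectors use more complex losses, and the right weight would have to be tuned. Collecting more images of darker-skinned pedestrians, the other half of the fix, would simply put more such examples into each batch.

```python
import math
from typing import Optional

# Hypothetical weight: darker-skinned pedestrian examples count this many times
# as much as other examples when averaging the training loss.
DARKER_WEIGHT = 2.0


def example_weight(skin_type: Optional[int]) -> float:
    """skin_type is None for examples without an annotated pedestrian."""
    if skin_type is not None and skin_type >= 4:
        return DARKER_WEIGHT
    return 1.0


def bce(prob: float, label: int) -> float:
    """Binary cross-entropy for one "is this a pedestrian?" prediction."""
    eps = 1e-12
    prob = min(max(prob, eps), 1 - eps)  # avoid log(0)
    return -(label * math.log(prob) + (1 - label) * math.log(1 - prob))


def weighted_loss(batch):
    """Weighted average loss over a batch of (predicted_prob, label, skin_type) tuples."""
    weights = [example_weight(s) for _, _, s in batch]
    losses = [bce(p, y) for p, y, _ in batch]
    return sum(w * l for w, l in zip(weights, losses)) / sum(weights)
```

In a batch that also contains lighter-skinned examples, a missed darker-skinned pedestrian takes a larger share of this weighted loss than it would in a plain average, so training is pushed harder to correct that mistake.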
