Face-Reading AI Helps Detect Whether Suspects Are Telling Lies

Jyotis - Jul 01, 2019

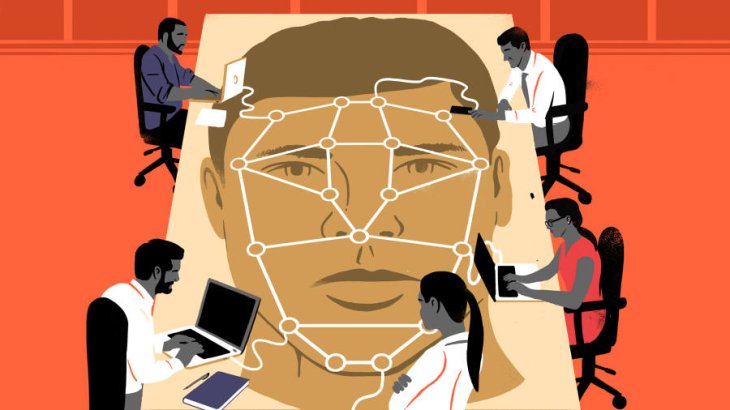

The company developed this AI system to detect human emotions ranging from fear and surprise to anger. These emotions are revealed through micro-expressions that cannot be seen by the naked eye.

For over 40 years, the research of US psychologist Paul Ekman, who specializes in facial expressions, has been used to detect human lies. However, artificial intelligence (AI) may soon become an alternative.

Although the United States was among the first nations to deploy automated technologies to read the hidden reactions and emotions of suspects, the technique is still young.

Many startups are being funded and developed to improve its accuracy and efficiency, as well as to reduce the likelihood of false signals.

According to a report from The Times, a United Kingdom startup named Facesoft has revealed that it has built a database containing 300 million (30 crore) images of faces. Many of these images were created by an AI system based on models of the human brain.

The company developed this system to detect human emotions ranging from fear and surprise to anger. These emotions are revealed through micro-expressions that cannot be seen by the naked eye.

As the Chief Executive Officer and co-founder of Facesoft, Allan Ponniah said to The Times

The Facesoft CEO also works as a reconstructive and plastic surgeon in London.

His company has worked with the Mumbai police to monitor crowds and identify emerging mob dynamics using the AI system. Facesoft has also presented its technology to UK police forces.

The use of AI technologies by police forces has sparked considerable controversy recently. A group of researchers from Apple, Amazon, Alphabet, Microsoft, and Facebook reported in April of this year that many current algorithms that help police decide who should get probation, parole, or bail, and that help judges decide what sentence to give an offender, may be opaque, biased, or may not work at all.

According to the Partnership on AI (PAI), such systems are already widely used in the United States and are gaining a foothold in many other countries. The technology industry consortium said it does not support the use of these systems.
